・2018/08/10

[Failed attempt] How to install the TensorFlow Deep Learning Framework on a Raspberry Pi

(How to put the TensorFlow Deep Learning Framework on a Raspberry Pi and have nightmares with Google DeepDream)

Tags: [Raspberry Pi], [Electronics], [Deep Learning]

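● How to run Google DeepDream on a Raspberry Pi and mass-produce creepy pictures

Running DeepDream uses a Deep Learning Framework called Caffe.

This runs Google DeepDream on a Raspberry Pi to mass-produce the creepy pictures that were all the rage for a while.

DeepDream - Wikipedia

Example of an image generated with DeepDream (quoted from Wikipedia)

・DeepDream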

● How to run DeepDream on a Raspberry Pi with the Caffe Deep Learning Framework

・2018/08/04

[Build version] Running DeepDream on a Raspberry Pi to crank out creepy pictures: Caffe Deep Learning Framework

Build the Caffe Deep Learning Framework on a Raspberry Pi and run Deep Dream to generate creepy pictures

・2018/08/04

[Install version] Running DeepDream on a Raspberry Pi to crank out creepy pictures: Caffe Deep Learning

Install the Caffe Deep Learning Framework on a Raspberry Pi and run Deep Dream to generate creepy pictures

・2018/08/06

A test of cranking out creepy DeepDream pictures at high speed with the 64-bit power of the Orange Pi PC 2

Build the Caffe Deep Learning Framework on an OrangePi PC2 and run Deep Dream to generate creepy pictures

● Version of the Raspberry Pi Raspbian OS used this time

RASPBIAN STRETCH WITH DESKTOP

Version:June 2018

Release date: 2018-06-27

Kernel version: 4.14

pi@raspberrypi:~/pytorch $ uname -a

Linux raspberrypi 4.14.50-v7+ #1122 SMP Tue Jun 19 12:26:26 BST 2018 armv7l GNU/Linux

● Raspberry Piで TensorFlowをコマンドラインで簡単にインストールする

補足追加:

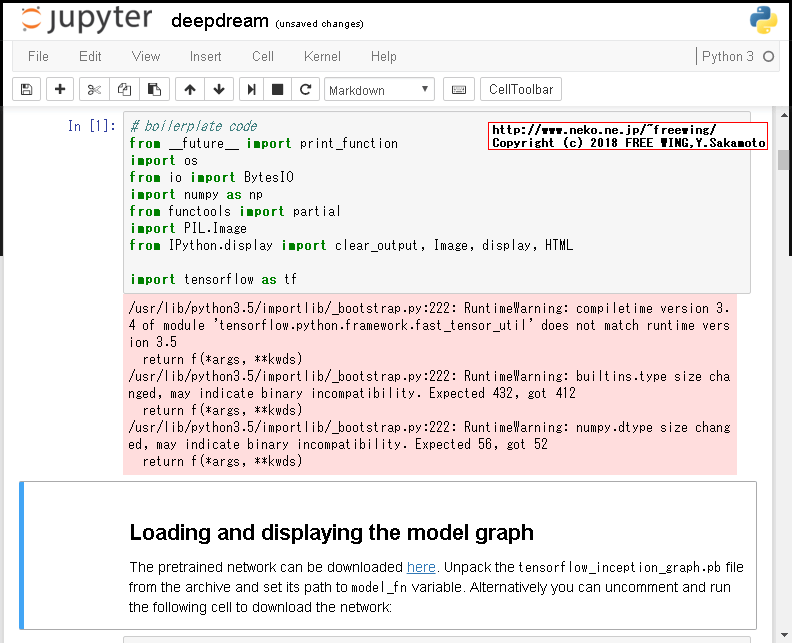

TensorFlowを Raspberry Piにインストールする場合、TensorFlow公式のコマンドラインでインストールする手順が一番簡単ですが、2018年 8月の現時点では pip3 install tensorflowした tensorflowのライブラリと、ラズパイの Pythonのバージョンとで不整合が有る感じで、RuntimeWarningが発生します。関連性は分かりませんが、結果 Bus errorで動きませんでした。

pip3 install tensorflowの動作対象の Pythonは Python 3.4

ラズパイの Pythonは Python 3.5

RuntimeWarningの内容

RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

と言う訳でラズパイで TensorFlowを動かす為の解決方法をググっていたら下記の TensorFlow for Armを見つけました。

下記のバージョンはラズパイの Python 3.5でも問題無く動きました。

・2018/08/14

【成功版】Raspberry Piに TensorFlow Deep Learning Frameworkをインストールする方法

ラズパイに TensorFlow Deep Learning Frameworkを入れて Google DeepDreamで悪夢を見る方法
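As a rough illustration of the version mismatch described above, the Python tag embedded in a wheel filename can be compared with the running interpreter before installing. This is only a sketch: `wheel_python_tag` and `interpreter_tag` are hypothetical helper names made up for this example, and the filename is the one pip printed in this article. Note that in this article the filename tag (cp35) matched the interpreter while the warning still reported a 3.4 compile-time version, so a matching tag is a basic sanity check, not a guarantee.

```python
import sys


def wheel_python_tag(filename):
    """Return the Python tag (e.g. 'cp35') from a wheel filename."""
    # Wheel names look like: name-version-pythontag-abitag-platform.whl
    stem = filename[:-len(".whl")] if filename.endswith(".whl") else filename
    parts = stem.split("-")
    return parts[-3]


def interpreter_tag(version_info=sys.version_info):
    """Return a CPython-style tag such as 'cp35' for an interpreter version."""
    return "cp{}{}".format(version_info[0], version_info[1])


# Filename copied from the pip output in this article.
wheel = "tensorflow-1.9.0-cp35-none-linux_armv7l.whl"
print("wheel tag:", wheel_python_tag(wheel))
print("interpreter tag:", interpreter_tag())
```

If the two tags differ, pip would normally refuse the wheel, so the more interesting case is the one seen here, where the tags agree but the binary was compiled against another version.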

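● Installing TensorFlow on a Raspberry Pi the easy way, from the command line

This article is a [summary of failures] from my trial and error when I first tried to get TensorFlow running.

Incidentally, TensorFlow is read as "tensor flow".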

Installing TensorFlow on Raspbian

tensorflow/tensorflow

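When installing TensorFlow on a Raspberry Pi, the command-line procedure from the official TensorFlow guide is the simplest.

The official TensorFlow guide says that building from source on the Raspberry Pi itself takes more than 24 hours.

"it can take over 24 hours to build on a Pi,"

For building from source, it recommends cross-compiling, that is, generating the Raspberry Pi binaries on a PC.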

● Installing TensorFlow on Raspbian

# Installing TensorFlow on Raspbian

# The usual first step: refresh the package lists with sudo apt-get update

sudo apt-get update

● For Python 3

# Installing TensorFlow on Raspbian

# Python 3.4+ (Python 3.4 or later)

python3 -V

# Python 3.5.3

# for Python 3.n

pip3 -V

# pip 9.0.1 from /usr/lib/python3/dist-packages (python 3.5)

# for Python 3.n

sudo apt-get -y install python3-pip

sudo apt -y install libatlas-base-dev

# Python 3.n

pip3 install tensorflow

# Downloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.9.0-cp35-none-linux_armv7l.whl (66.7MB)

# tensorflow-1.9.0

● For Python 2

# Installing TensorFlow on Raspbian

# Python 2.7

python -V

# Python 2.7.13

# for Python 2.7

pip -V

# pip 9.0.1 from /usr/lib/python2.7/dist-packages (python 2.7)

# for Python 2.7

sudo apt-get -y install python-pip

sudo apt -y install libatlas-base-dev

# Python 2.7

pip install tensorflow

# Downloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.9.0-cp27-none-linux_armv7l.whl (66.7MB)

pi@raspberrypi:~/tf/deepdream_highres $ pip install tensorflow

Collecting tensorflow

Downloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.9.0-cp27-none-linux_armv7l.whl (66.7MB)

100% |████████████████████████████████| 66.7MB 1.1kB/s

...

Installing collected packages: termcolor, six, setuptools, protobuf, absl-py, enum34, futures, grpcio, gast, numpy, markdown, werkzeug, wheel, tensorboard, backports.weakref, astor, funcsigs, pbr, mock, tensorflow

Successfully installed absl-py-0.3.0 astor-0.7.1 backports.weakref-1.0.post1 enum34-1.1.6 funcsigs-1.0.2 futures-3.2.0 gast-0.2.0 grpcio-1.14.1 markdown-2.6.11 mock-2.0.0 numpy-1.15.0 pbr-4.2.0 protobuf-3.6.0 setuptools-39.1.0 six-1.11.0 tensorboard-1.9.0 tensorflow-1.9.0 termcolor-1.1.0 werkzeug-0.14.1 wheel-0.31.1

HelloTensorFlow.py

# Python

import tensorflow as tf

tf.enable_eager_execution()

hello = tf.constant('Hello, TensorFlow !')

print(hello)

pi@raspberrypi:~/bazel $ python3

Python 3.5.3 (default, Jan 19 2017, 14:11:04)

[GCC 6.3.0 20170124] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

>>> tf.enable_eager_execution()

>>> hello = tf.constant('Hello, TensorFlow !')

>>> print(hello)

tf.Tensor(b'Hello, TensorFlow !', shape=(), dtype=string)

>>> quit()
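To see exactly which RuntimeWarnings an import raises without scrolling through the REPL output, they can be recorded with the standard `warnings` module. The sketch below is an assumption-laden illustration: `collect_runtime_warnings` and `fake_import` are hypothetical names, and `fake_import` only imitates the warning text from the log above so the example runs without TensorFlow installed.

```python
import warnings


def collect_runtime_warnings(action):
    """Run action() and return the RuntimeWarning messages it raised."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")  # do not let filters hide repeats
        action()
    return [str(w.message) for w in caught
            if issubclass(w.category, RuntimeWarning)]


def fake_import():
    # Stand-in for `import tensorflow`; the text is copied from the log above.
    warnings.warn("numpy.dtype size changed, may indicate binary incompatibility. "
                  "Expected 56, got 52", RuntimeWarning)


for message in collect_runtime_warnings(fake_import):
    print(message)
```

On the Raspberry Pi, the real import could be passed in instead, for example `collect_runtime_warnings(lambda: __import__("tensorflow"))`. A module that is already imported will not raise its import-time warnings again.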

pi@raspberrypi:~/bazel $ pip3 install tensorflow==1.3.0

Collecting tensorflow==1.3.0

Could not find a version that satisfies the requirement tensorflow==1.3.0 (from versions: 0.11.0, 1.8.0, 1.9.0)

No matching distribution found for tensorflow==1.3.0

pi@raspberrypi:~/bazel $ pip3 install tensorflow==1.8.0

Collecting tensorflow==1.8.0

Downloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.8.0-cp35-none-linux_armv7l.whl (67.0MB)

With 1.8.0 the same "compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5" warning occurs.

pi@raspberrypi:~/bazel $ python3

Python 3.5.3 (default, Jan 19 2017, 14:11:04)

[GCC 6.3.0 20170124] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

>>> tf.enable_eager_execution()

>>> hello = tf.constant('Hello, TensorFlow !')

>>> print(hello)

tf.Tensor(b'Hello, TensorFlow !', shape=(), dtype=string)

>>> quit()

pi@raspberrypi:~ $ pip install tensorflow # Python 2.7

Collecting tensorflow

Downloading https://www.piwheels.org/simple/tensorflow/tensorflow-1.9.0-cp27-none-linux_armv7l.whl (66.7MB)

99% |████████████████████████████████| 66.7MB 401kB/s eta 0:00:01Exception:

Traceback (most recent call last):

File "/usr/lib/python2.7/dist-packages/pip/basecommand.py", line 215, in main

status = self.run(options, args)

File "/usr/lib/python2.7/dist-packages/pip/commands/install.py", line 353, in run

wb.build(autobuilding=True)

File "/usr/lib/python2.7/dist-packages/pip/wheel.py", line 749, in build

self.requirement_set.prepare_files(self.finder)

...

File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/controller.py", line 275, in cache_response

self.serializer.dumps(request, response, body=body),

File "/usr/share/python-wheels/CacheControl-0.11.7-py2.py3-none-any.whl/cachecontrol/serialize.py", line 87, in dumps

).encode("utf8"),

MemoryError

● If pip install tensorflow on Python 2.7 fails with MemoryError, increase the swap space

Increase the swap space from 100MB to 2GB.

sudo sed -i -e "s/^CONF_SWAPSIZE=.*/CONF_SWAPSIZE=2048/g" /etc/dphys-swapfile

cat /etc/dphys-swapfile | grep CONF_SWAPSIZE

# CONF_SWAPSIZE=2048

sudo service dphys-swapfile restart

free

total used free shared buff/cache available

Mem: 1000184 30080 923984 12856 46120 915968

Swap: 2097148 0 2097148
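For readers who find the sed one-liner hard to read, the same substitution can be sketched in Python. `set_swapsize` is a hypothetical helper written for this example, and the sample text is made up; it only transforms a string and does not touch /etc/dphys-swapfile.

```python
import re


def set_swapsize(config_text, size_mb):
    """Return config_text with every CONF_SWAPSIZE= line set to size_mb."""
    return re.sub(r"^CONF_SWAPSIZE=.*$",
                  "CONF_SWAPSIZE={}".format(size_mb),
                  config_text, flags=re.MULTILINE)


# Made-up sample content, not the real file.
sample = "# sample dphys-swapfile content\nCONF_SWAPSIZE=100\n"
print(set_swapsize(sample, 2048))
```

Like the sed pattern, the regular expression only matches lines that start with CONF_SWAPSIZE=, so commented-out lines are left alone. The Swap total of 2097148 reported by free is in KiB, which is about 2048 MiB and matches the new setting.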

● TensorFlow runtime warning on Python 2

/home/pi/.local/lib/python2.7/site-packages/tensorflow/python/framework/tensor_util.py:32: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

pi@raspberrypi:~ $ python

Python 2.7.13 (default, Nov 24 2017, 17:33:09)

[GCC 6.3.0 20170516] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

/home/pi/.local/lib/python2.7/site-packages/tensorflow/python/framework/tensor_util.py:32: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

from tensorflow.python.framework import fast_tensor_util

>>> tf.enable_eager_execution()

>>> hello = tf.constant('Hello, TensorFlow !')

>>> print(hello)

tf.Tensor(Hello, TensorFlow !, shape=(), dtype=string)

>>> quit()

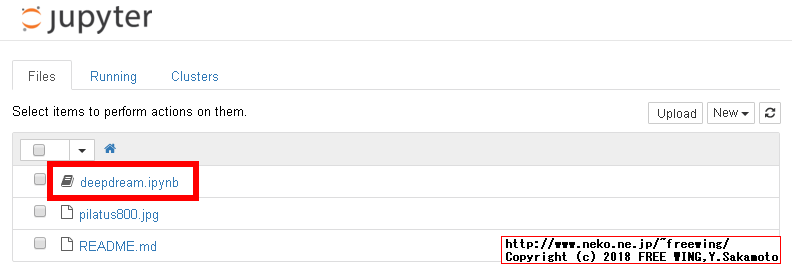

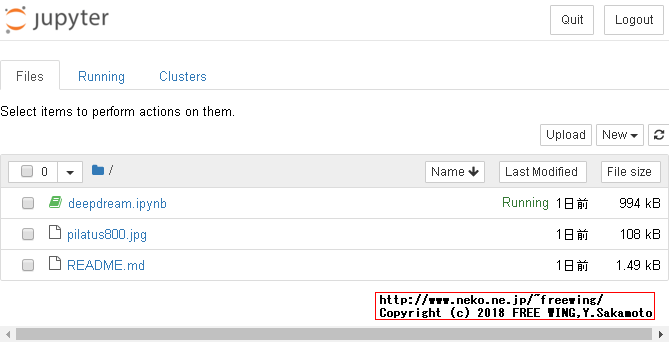

● Having nightmares with Google DeepDream using TensorFlow on a Raspberry Pi

Install IPython Notebook (Jupyter Notebook) on the Raspberry Pi beforehand.

# Clone the TensorFlow repository with Git to download the TensorFlow samples

cd

git clone https://github.com/tensorflow/tensorflow.git --depth 1

cd tensorflow

cd tensorflow/examples/tutorials/deepdream/

ls -l

# -rw-r--r-- 1 pi pi 993670 Aug 10 09:25 deepdream.ipynb

# -rw-r--r-- 1 pi pi 108340 Aug 10 09:25 pilatus800.jpg

# -rw-r--r-- 1 pi pi 1493 Aug 10 09:25 README.md

# Mark the deepdream.ipynb notebook as "trusted"

ipython3 trust deepdream.ipynb

# Signing notebook: deepdream.ipynb

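# Run IPython

ipython3 notebook

# Do not restrict by IP, so the IPython HTTP server can also be reached from other machines

# Do not launch a browser when IPython starts (this is the command line, so no browser can be launched anyway)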

ipython3 notebook --ip=* --no-browser

In a browser on your PC, open

http://{IP address of the Raspberry Pi}:8888

deepdream.ipynb

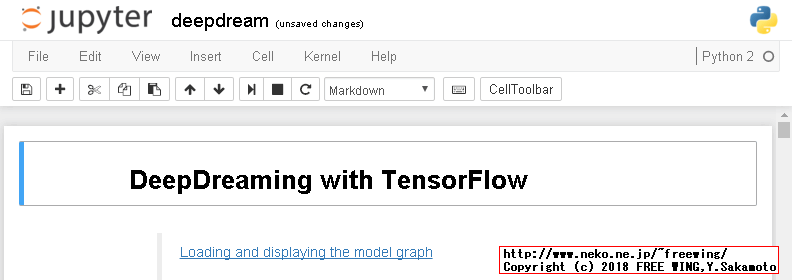

Click the file.

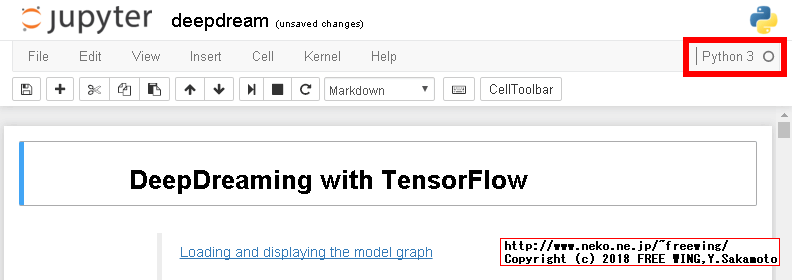

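(If the Python version shown at the top right of the screen is Python 2, change it to Python 3)

Note: The TensorFlow deepdream.ipynb notebook does not run on Python 2.

Even after switching the IPython kernel to Python 3, right after running In [5] I got "Kernel Restarting The kernel appears to have died." and could not run it to the end.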

NameError: name 'graph' is not defined

pip3 install igraph

NameError: name 'np' is not defined

pip3 install numpy

ImportError: libf77blas.so.3: cannot open shared object file: No such file or directory

sudo apt-get -y install libatlas-base-dev

ImportError: No module named 'tensorflow'

Kernel Restarting

The kernel appears to have died. It will restart automatically.

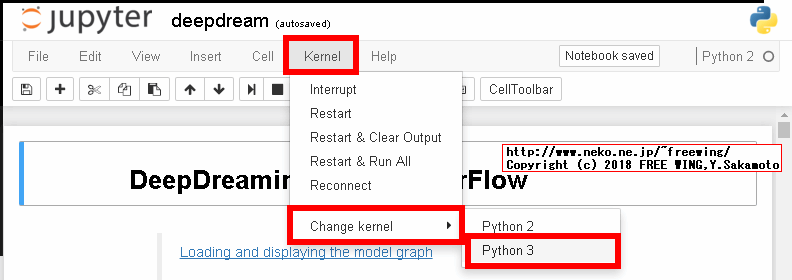

● How to change the Python version (kernel) that IPython Notebook runs

In the menu at the top of the screen where the ipynb file is open:

・Kernel

・Change kernel

・Choose Python 2 or Python 3

● Having nightmares with Google DeepDream using TensorFlow on a Raspberry Pi: IPython Notebook

Kernel Restarting

The kernel appears to have died. It will restart automatically.

● Having nightmares with Google DeepDream using TensorFlow on a Raspberry Pi

Install IPython Notebook (Jupyter Notebook) on the Raspberry Pi beforehand.

cd

git clone https://github.com/tensorflow/tensorflow.git --depth 1

cd tensorflow

cd tensorflow/examples/tutorials/deepdream/

ls -l

# -rw-r--r-- 1 pi pi 993670 Aug 10 09:25 deepdream.ipynb

# -rw-r--r-- 1 pi pi 108340 Aug 10 09:25 pilatus800.jpg

# -rw-r--r-- 1 pi pi 1493 Aug 10 09:25 README.md

# Mark the deepdream.ipynb notebook as "trusted"

ipython trust deepdream.ipynb

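# Run IPython

ipython notebook

# Do not restrict by IP, so the IPython HTTP server can also be reached from other machines

# Do not launch a browser when IPython starts (this is the command line, so no browser can be launched anyway)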

ipython notebook --ip=* --no-browser

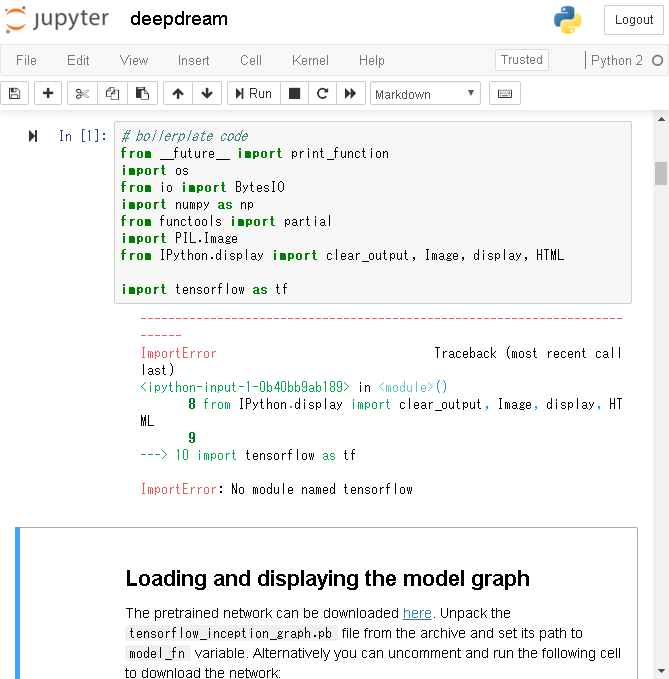

# boilerplate code

from __future__ import print_function

import os

from io import BytesIO

import numpy as np

from functools import partial

import PIL.Image

from IPython.display import clear_output, Image, display, HTML

import tensorflow as tf

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

<ipython-input-1-0b40bb9ab189> in <module>()

8 from IPython.display import clear_output, Image, display, HTML

9

---> 10 import tensorflow as tf

ImportError: No module named tensorflow

IPython is running on Python 2, so install TensorFlow for Python 2.

pi@raspberrypi:~ $ pip install tensorflow # Python 2.7

...

Using cached https://files.pythonhosted.org/packages/8c/10/79282747f9169f21c053c562a0baa21815a8c7879be97abd930dbcf862e8/setuptools-39.1.0-py2.py3-none-any.whl

Collecting mock>=2.0.0 (from tensorflow)

Exception:

Traceback (most recent call last):

File "/usr/lib/python2.7/dist-packages/pip/basecommand.py", line 215, in main

status = self.run(options, args)

File "/usr/lib/python2.7/dist-packages/pip/commands/install.py", line 353, in run

wb.build(autobuilding=True)

...

File "/usr/share/python-wheels/urllib3-1.19.1-py2.py3-none-any.whl/urllib3/connectionpool.py", line 643, in urlopen

_stacktrace=sys.exc_info()[2])

File "/usr/share/python-wheels/urllib3-1.19.1-py2.py3-none-any.whl/urllib3/util/retry.py", line 315, in increment

total -= 1

TypeError: unsupported operand type(s) for -=: 'Retry' and 'int'

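● TypeError: unsupported operand type(s) for -=: 'Retry' and 'int'

This error apparently occurs when the server that pip fetches the required files from has connection trouble, or when the network environment is poor.

It is also said to occur when pip itself is old, so upgrading pip first is probably a good idea.

# What to do when pip install fails with the unsupported operand error: upgrade pip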

sudo python -m pip install --upgrade --force setuptools

sudo python -m pip install --upgrade --force pip

● Signing dream.ipynb with IPython

pi@raspberrypi:~/deepdream $ ipython trust dream.ipynb

[TerminalIPythonApp] WARNING | Subcommand `ipython trust` is deprecated and will be removed in future versions.

[TerminalIPythonApp] WARNING | You likely want to use `jupyter trust` in the future

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/usr/lib/python2.7/dist-packages/traitlets/config/application.py", line 657, in launch_instance

...

File "/usr/lib/python2.7/dist-packages/traitlets/config/application.py", line 445, in initialize_subcommand

subapp = import_item(subapp)

File "/usr/lib/python2.7/dist-packages/ipython_genutils/importstring.py", line 31, in import_item

module = __import__(package, fromlist=[obj])

ImportError: No module named nbformat.sign

sudo pip install jupyter_kernel_gateway

ImportError: No module named shutil_get_terminal_size

pip install backports.shutil_get_terminal_size

import backports

print(backports)

pi@raspberrypi:~/deepdream $ ipython3 trust dream.ipynb

[TerminalIPythonApp] WARNING | Subcommand `ipython trust` is deprecated and will be removed in future versions.

[TerminalIPythonApp] WARNING | You likely want to use `jupyter trust` in the future

[TrustNotebookApp] Writing notebook-signing key to /home/pi/.local/share/jupyter/notebook_secret

Signing notebook: dream.ipynb

● Running TensorFlow with a Python implementation of DeepDream: hjptriplebee/deep_dream_tensorflow

Note: It failed with a Bus error. I wonder whether it is related to the RuntimeWarning?

hjptriplebee/deep_dream_tensorflow

An implement of google deep dream with tensorflow

cd

git clone https://github.com/hjptriplebee/deep_dream_tensorflow.git

cd deep_dream_tensorflow

python3 main.py --input nature_image.jpg --output nature_image_tf.jpg

python3 main.py --input paint.jpg --output paint_tf.jpg

pi@raspberrypi:~/deep_dream_tensorflow $ python3 main.py --input nature_image.jpg --output nature_image_tf.jpg

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

octave num 1/4...

Bus error

ImportError: No module named 'cv2'

sudo pip3 install opencv-python

# Successfully installed opencv-python-3.4.2.17

from .cv2 import *

ImportError: libjasper.so.1: cannot open shared object file: No such file or directory

sudo apt-get -y install libjasper-dev

# Setting up libjasper1:armhf (1.900.1-debian1-2.4+deb8u1) ...

# Setting up libjasper-dev (1.900.1-debian1-2.4+deb8u1) ...

sudo apt-get -y install libatlas-base-dev

sudo apt-get -y install libqtgui4

sudo apt-get -y install python3-pyqt5

# Setting up python3-pyqt5 (5.7+dfsg-5) ...

Bus error

No solution found.
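"Bus error" is what the shell prints when a process is killed by the SIGBUS signal, so the crash takes the whole Python process down and cannot be caught with try/except. One way to at least record it is to run the suspect code in a child process and inspect how it ended. The sketch below assumes Python 3.5 or later on a POSIX system; `run_isolated` is a hypothetical helper, and the second call only simulates a crash by having the child send SIGBUS to itself.

```python
import signal
import subprocess
import sys


def run_isolated(code):
    """Run Python code in a child process; return (exit_code, signal_name)."""
    result = subprocess.run([sys.executable, "-c", code])
    if result.returncode < 0:
        # On POSIX a negative return code means the child died from a signal.
        return result.returncode, signal.Signals(-result.returncode).name
    return result.returncode, None


print(run_isolated("print('child ran fine')"))
# Simulated crash: the child sends SIGBUS to itself (POSIX only).
print(run_isolated("import os, signal; os.kill(os.getpid(), signal.SIGBUS)"))
```

This does not fix anything, but it lets a test script keep going and log which step died, for example by passing `"import tensorflow"` as the code string.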

● Running TensorFlow with a Python implementation of DeepDream: EdCo95/deepdream

Note: It failed with a Bus error. I wonder whether it is related to the RuntimeWarning?

EdCo95/deepdream

Implementation of the DeepDream computer vision algorithm in TensorFlow, following the guide on the TensorFlow repo.

cd

mkdir tf

cd tf

git clone https://github.com/EdCo95/deepdream.git

cd deepdream

pi@raspberrypi:~/tf/deepdream $ python simple_dreaming.py

File "simple_dreaming.py", line 70

print(score, end=" ")

^

SyntaxError: invalid syntax

python3 simple_dreaming.py

Bus error

pi@raspberrypi:~/tf/deepdream $ python3 simple_dreaming.py

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

Bus error

Bus error

No solution found.

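● Running TensorFlow with a Python implementation of DeepDream: mtyka/deepdream_highres

Note: It did not run because the Python script and the TensorFlow build targeted different Python versions.

Python script = Python2

TensorFlow binary = Python3

Python 2 and 3 are both still in active use and parts of them are incompatible, which is really painful for someone like me who just grabs samples and runs them! (Swift breaks compatibility between versions just as abruptly, though with Swift I'd say the fault lies with its creators.)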

mtyka/deepdream_highres

Deepdream in Tensorflow for highres images

cd

mkdir tf

cd tf

git clone https://github.com/mtyka/deepdream_highres.git

cd deepdream_highres

wget https://storage.googleapis.com/download.tensorflow.org/models/inception5h.zip

unzip inception5h.zip

wget https://github.com/google/deepdream/raw/master/flowers.jpg

# Python 2

python deepdream.py --input flowers.jpg --output output.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 10

ImportError: No module named tensorflow

# Python 3

python3 deepdream.py --input flowers.jpg --output output.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 10

SyntaxError: Missing parentheses in call to 'print'

SyntaxError: Missing parentheses in call to 'print'

print "hoge"

to

print("hoge")
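Both SyntaxErrors in this article come from the same difference: `print` is a statement in Python 2 and a function in Python 3. A minimal sketch of the function form, including the `end=` keyword that tripped up simple_dreaming.py under Python 2, is shown below. `report` is a hypothetical helper for illustration, and the block as written targets Python 3.

```python
import sys

# On Python 2.7 this syntax needs `from __future__ import print_function`
# as the first statement of the file; on Python 3 nothing extra is required.


def report(scores, stream=sys.stdout):
    """Print all scores on one line, separated by spaces."""
    for score in scores:
        print(score, end=" ", file=stream)  # end=" " replaces the newline
    print(file=stream)  # finish the line


report([0.25, 0.5])
```

Adding the `__future__` import to a Python 2 script only fixes the print syntax; other Python 2 and 3 differences in a script would still need separate changes.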

● Result of installing TensorFlow for Python 2 with pip install tensorflow and running it

Note: It failed with a Bus error. I wonder whether it is related to the RuntimeWarning?

RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

pi@raspberrypi:~/tf/deepdream_highres $ python deepdream.py --input flowers.jpg --output output.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 10

/home/pi/.local/lib/python2.7/site-packages/tensorflow/python/framework/tensor_util.py:32: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

from tensorflow.python.framework import fast_tensor_util

--- Available Layers: ---

import/conv2d0_w Features/Channels: 64

import/conv2d0_b Features/Channels: 64

import/conv2d1_w Features/Channels: 64

...

import/softmax1_b Features/Channels: 1008

import/softmax2_w Features/Channels: 1008

import/softmax2_b Features/Channels: 1008

...

import/mixed4d_3x3_bottleneck_pre_relu Features/Channels: 144

import/mixed4d_3x3_bottleneck Features/Channels: 144

import/mixed4d_3x3_pre_relu/conv Features/Channels: 288

...

import/mixed5b_3x3_bottleneck_pre_relu/conv Features/Channels: 192

import/mixed5b_3x3_bottleneck_pre_relu Features/Channels: 192

import/mixed5b_3x3_bottleneck Features/Channels: 192

...

import/output Features/Channels: 1008

import/output1 Features/Channels: 1008

import/output2 Features/Channels: 1008

Number of layers 360

Total number of feature channels: 87322

Chosen layer:

name: "import/mixed5a_5x5_pre_relu"

op: "BiasAdd"

input: "import/mixed5a_5x5_pre_relu/conv"

input: "import/mixed5a_5x5_b"

device: "/device:CPU:0"

attr {

key: "T"

value {

type: DT_FLOAT

}

}

attr {

key: "data_format"

value {

s: "NHWC"

}

}

Cycle 0 Res: (240, 320, 3)

Octave: 0 Res: (10, 14, 3)

Bus error

● If you just want to get TensorFlow running with anything at all

Get Started with TensorFlow

● Training on digits (character image recognition) with TensorFlow

cd

cd tensorflow/

cd tensorflow/examples/tutorials/mnist/

pi@raspberrypi:~/tensorflow/tensorflow/examples/tutorials/mnist $ python fully_connected_feed.py

Traceback (most recent call last):

File "fully_connected_feed.py", line 28, in <module>

import tensorflow as tf

ImportError: No module named tensorflow

python3 fully_connected_feed.py

pi@raspberrypi:~/tensorflow/tensorflow/examples/tutorials/mnist $ python3 fully_connected_feed.py

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

WARNING:tensorflow:From fully_connected_feed.py:120: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

WARNING:tensorflow:From /home/pi/.local/lib/python3.5/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.

Instructions for updating:

Please write your own downloading logic.

WARNING:tensorflow:From /home/pi/.local/lib/python3.5/site-packages/tensorflow/contrib/learn/python/learn/datasets/base.py:252: _internal_retry.<locals>.wrap.<locals>.wrapped_fn (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.

Instructions for updating:

Please use urllib or similar directly.

Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.

WARNING:tensorflow:From /home/pi/.local/lib/python3.5/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting /tmp/tensorflow/mnist/input_data/train-images-idx3-ubyte.gz

Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.

WARNING:tensorflow:From /home/pi/.local/lib/python3.5/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting /tmp/tensorflow/mnist/input_data/train-labels-idx1-ubyte.gz

Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.

Extracting /tmp/tensorflow/mnist/input_data/t10k-images-idx3-ubyte.gz

Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.

Extracting /tmp/tensorflow/mnist/input_data/t10k-labels-idx1-ubyte.gz

WARNING:tensorflow:From /home/pi/.local/lib/python3.5/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

Step 0: loss = 2.30 (0.664 sec)

Step 100: loss = 2.21 (0.012 sec)

Step 200: loss = 1.96 (0.012 sec)

Step 300: loss = 1.66 (0.011 sec)

Step 400: loss = 1.48 (0.011 sec)

Step 500: loss = 1.09 (0.011 sec)

Step 600: loss = 0.97 (0.014 sec)

Step 700: loss = 0.73 (0.012 sec)

Step 800: loss = 0.67 (0.013 sec)

Step 900: loss = 0.81 (0.012 sec)

Training Data Eval:

Num examples: 55000 Num correct: 47249 Precision @ 1: 0.8591

Validation Data Eval:

Num examples: 5000 Num correct: 4333 Precision @ 1: 0.8666

Test Data Eval:

Num examples: 10000 Num correct: 8609 Precision @ 1: 0.8609

Step 1000: loss = 0.53 (0.054 sec)

Step 1100: loss = 0.54 (0.342 sec)

Step 1200: loss = 0.42 (0.012 sec)

Step 1300: loss = 0.53 (0.013 sec)

Step 1400: loss = 0.49 (0.012 sec)

Step 1500: loss = 0.42 (0.015 sec)

Step 1600: loss = 0.35 (0.015 sec)

Step 1700: loss = 0.34 (0.012 sec)

Step 1800: loss = 0.49 (0.013 sec)

Step 1900: loss = 0.25 (0.015 sec)

Training Data Eval:

Num examples: 55000 Num correct: 49152 Precision @ 1: 0.8937

Validation Data Eval:

Num examples: 5000 Num correct: 4500 Precision @ 1: 0.9000

Test Data Eval:

Num examples: 10000 Num correct: 8995 Precision @ 1: 0.8995
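The "Precision @ 1" figures in the log are consistent with simply dividing the number of correct predictions by the number of examples. The sketch below redoes that arithmetic with the numbers logged after step 1900; `precision_at_1` is a name chosen for this example, not something taken from the tutorial script.

```python
def precision_at_1(num_correct, num_examples):
    """Fraction of examples whose top prediction was correct."""
    return num_correct / num_examples


# (label, num_examples, num_correct) copied from the log after step 1900
evals = [
    ("Training", 55000, 49152),
    ("Validation", 5000, 4500),
    ("Test", 10000, 8995),
]
for label, num_examples, num_correct in evals:
    print("{}: {:.4f}".format(label, precision_at_1(num_correct, num_examples)))
```

So after about 2000 steps the model gets roughly 90% of the test digits right, up from about 86% after the first 1000 steps.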

● Training on words (text) with TensorFlow

cd

cd tensorflow/

cd tensorflow/examples/tutorials/word2vec

python3 word2vec_basic.py

pi@raspberrypi:~/tensorflow/tensorflow/examples/tutorials/word2vec $ ls -l

-rw-r--r-- 1 pi pi 429 Aug 12 19:41 BUILD

-rw-r--r-- 1 pi pi 0 Aug 12 19:41 __init__.py

-rw-r--r-- 1 pi pi 12795 Aug 12 19:41 word2vec_basic.py

pi@raspberrypi:~/tensorflow/tensorflow/examples/tutorials/word2vec $ python3 word2vec_basic.py

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

Found and verified text8.zip

Data size 17005207

Most common words (+UNK) [['UNK', 418391], ('the', 1061396), ('of', 593677), ('and', 416629), ('one', 411764)]

Sample data [5242, 3082, 12, 6, 195, 2, 3137, 46, 59, 156] ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against']

3082 originated -> 5242 anarchism

3082 originated -> 12 as

12 as -> 6 a

12 as -> 3082 originated

6 a -> 195 term

6 a -> 12 as

195 term -> 2 of

195 term -> 6 a

Initialized

Average loss at step 0 : 317.18463134765625

Nearest to an: initiator, chinese, quadriceps, marwan, manchurian, frontline, federline, townspeople,

Nearest to more: thessalonica, abstractions, forensics, daimlerchrysler, aficionados, ideas, third, inaccuracies,

Nearest to be: fuerza, micrometers, ought, carpets, lao, conductivity, inference, gardeners,

Nearest to over: ramos, discussed, emigrate, terms, nchen, slough, tryptophan, battlefield,

Nearest to at: pls, manifests, intensive, basque, thoroughbreds, olav, mania, conveyor,

Nearest to first: thunderbirds, geo, supposes, disability, calendars, cindy, recipient, leeds,

Nearest to to: glycemic, impractical, prototype, ller, dramatically, cetacean, promo, flower,

Nearest to he: celebrities, generalisation, pines, bixby, emulators, import, floated, cmf,

Nearest to some: whitepapers, trowa, russa, antagonist, tunnelling, kitts, lozada, allan,

Nearest to been: icosidodecahedron, taiping, tranquility, contender, dioscorus, mawr, phoenicia, civilised,

Nearest to years: hashes, modernisation, soar, flutie, martens, chinon, said, upcoming,

Nearest to these: chandrasekhar, afar, corporation, woogie, pasolini, knicks, bicycling, handedness,

Nearest to states: pedals, intersecting, letterboxes, marcus, neopaganism, ravenna, drinks, hemorrhagic,

Nearest to united: ray, curran, didgeridoo, zealot, trusteeship, yarns, gur, anything,

Nearest to will: subversion, packaged, mondegreen, coro, onions, giambattista, motivational, moneo,

Nearest to from: nazarbayev, annapolis, sark, overcomes, fish, wadi, wrigley, evaded,

Average loss at step 2000 : 113.80575774669647

Average loss at step 4000 : 52.69416046977043

Average loss at step 6000 : 33.0515801718235

Average loss at step 8000 : 23.336391828536986

Average loss at step 10000 : 17.929039194107055

Nearest to an: the, sedatives, horror, gb, rights, zf, tricks, autism,

Nearest to more: third, deciduous, cc, daughters, deserted, bones, hydride, true,

Nearest to be: conventions, accessories, conductivity, little, revolution, meet, switch, boards,

Nearest to over: discussed, terms, invented, nine, levy, baldwin, hours, determination,

Nearest to at: wasn, pls, absence, in, odds, vocals, on, technische,

Nearest to first: mosque, dynasties, ruled, coke, closer, sender, and, molinari,

Nearest to to: and, in, coke, paradigms, grow, prizes, rogers, trial,

Nearest to he: it, been, starts, table, phi, celebrities, they, and,

Nearest to some: vaginal, incorporate, online, agave, indefinitely, hostility, agincourt, tonight,

Nearest to been: he, phi, grant, levitt, hurricane, coke, llewellyn, lymphoma,

Nearest to years: zero, nine, rotate, said, aberdeenshire, fao, film, boards,

Nearest to these: restricted, corporation, afar, cl, whale, teammate, aberdeenshire, performed,

Nearest to states: pedals, drinks, gb, two, lunar, badges, secretly, mathbf,

Nearest to united: ray, anything, climatic, ac, coke, assistive, omnibus, involving,

Nearest to will: macedonians, gland, amateurs, used, against, serious, archive, shield,

Nearest to from: in, of, and, with, child, der, omega, gland,

Average loss at step 12000 : 14.0876000957489

Average loss at step 14000 : 11.830730155587196

Average loss at step 16000 : 10.117526993513108

Average loss at step 18000 : 8.394923798322678

Average loss at step 20000 : 8.038336719870568

Nearest to an: the, dasyprocta, rights, gb, horror, initiator, sedatives, concern,

Nearest to more: third, deciduous, thessalonica, hydride, sufferers, daughters, bones, operatorname,

Nearest to be: have, conventions, is, conductivity, accessories, was, zealand, are,

Nearest to over: discussed, invented, machined, terms, agouti, levy, determination, five,

Nearest to at: in, and, wasn, on, from, filtration, for, peptide,

Nearest to first: agouti, dynasties, pelagius, equestrian, morphisms, mosque, coke, peptide,

Nearest to to: and, for, nine, with, in, not, of, by,

Nearest to he: it, they, been, she, starts, and, boulevard, also,

Nearest to some: the, many, this, vaginal, circ, peptide, tonight, yin,

Nearest to been: he, is, levitt, long, by, tal, providers, grant,

Nearest to years: operatorname, nine, hello, rotate, zero, seven, aberdeenshire, fao,

Nearest to these: circ, restricted, corporation, afar, the, onyx, often, latinus,

Nearest to states: pedals, intersecting, drinks, gb, circ, two, northwestern, kid,

Nearest to united: antwerp, ray, involving, climatic, anything, matched, assistive, coke,

Nearest to will: against, neologisms, macedonians, onions, xvii, gland, amateurs, ecuador,

Nearest to from: in, and, by, with, antwerp, of, on, for,

Average loss at step 22000 : 7.031585393786431

Average loss at step 24000 : 6.858001116275787

Average loss at step 26000 : 6.695932538747788

Average loss at step 28000 : 6.394147111177444

Average loss at step 30000 : 5.914885578513146

Nearest to an: the, dasyprocta, abiathar, concern, rights, sponsors, initiator, gb,

Nearest to more: deciduous, third, thessalonica, sufferers, hydride, officeholders, cfa, bones,

Nearest to be: have, by, are, was, conventions, accessories, is, conductivity,

Nearest to over: five, invented, discussed, levy, determination, machined, agouti, terms,

Nearest to at: in, from, for, wasn, and, on, with, anthropic,

Nearest to first: agouti, dynasties, pelagius, coke, peptide, equestrian, forerunner, mosque,

Nearest to to: for, with, nine, not, and, in, will, can,

Nearest to he: it, they, she, boulevard, there, starts, no, encryption,

Nearest to some: many, the, vaginal, all, this, circ, agave, tombs,

Nearest to been: by, was, he, long, once, rawlinson, levitt, be,

Nearest to years: six, operatorname, hashes, rotate, leq, versions, exists, io,

Nearest to these: primigenius, circ, restricted, the, afar, corporation, aberdeenshire, often,

Nearest to states: pedals, intersecting, drinks, gb, northwestern, rebounded, circ, ventricular,

Nearest to united: antwerp, ray, earthly, adhd, involving, assaults, matched, assistive,

Nearest to will: to, would, can, against, neologisms, could, ecuador, should,

Nearest to from: in, by, abet, with, antwerp, for, on, at,

Average loss at step 32000 : 5.958182794988155

Average loss at step 34000 : 5.725539566159249

Average loss at step 36000 : 5.798080120563507

Average loss at step 38000 : 5.5125210976600645

Average loss at step 40000 : 5.238765237927437

Nearest to an: the, concern, sponsors, rights, abiathar, tau, pars, initiator,

Nearest to more: deciduous, most, officeholders, biosphere, third, sufferers, thessalonica, cfa,

Nearest to be: have, by, was, were, are, been, accessories, conventions,

Nearest to over: determination, invented, discussed, levy, five, furry, akimbo, terms,

Nearest to at: in, on, from, wasn, six, for, peptide, bckgr,

Nearest to first: agouti, pelagius, forerunner, dynasties, peptide, coke, massif, third,

Nearest to to: nine, will, not, for, can, with, viewers, zero,

Nearest to he: it, she, they, there, who, boulevard, starts, zero,

Nearest to some: many, the, all, vaginal, these, this, circ, agave,

Nearest to been: by, be, was, once, he, long, tal, epistles,

Nearest to years: soar, four, versions, accumulator, operatorname, six, bouvet, rotate,

Nearest to these: restricted, some, afar, circ, primigenius, many, the, corporation,

Nearest to states: pedals, intersecting, drinks, hemorrhagic, gb, ventricular, northwestern, rebounded,

Nearest to united: antwerp, involving, ray, of, earthly, assaults, matched, adhd,

Nearest to will: can, would, to, could, should, against, neologisms, ecuador,

Nearest to from: in, abet, on, with, agouti, antwerp, and, for,

Average loss at step 42000 : 5.3715492960214615

Average loss at step 44000 : 5.232873802542686

Average loss at step 46000 : 5.233447861671448

Average loss at step 48000 : 5.260792678833008
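For reference, lists like `Nearest to he: it, she, they, ...` are typically produced by ranking every word in the vocabulary by cosine similarity to the query word's embedding. The following is a minimal NumPy sketch of that idea, not the code that printed the log above; the function name `nearest_words`, the four-word vocabulary and the 2-D vectors are made up for illustration.

```python
import numpy as np

def nearest_words(embeddings, vocab, word, top_k=8):
    """Return the top_k words whose vectors have the highest cosine similarity to `word`."""
    # Normalise each row to unit length so a dot product equals cosine similarity.
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    idx = vocab.index(word)
    sim = unit.dot(unit[idx])
    # Sort from most to least similar and skip the query word itself.
    order = np.argsort(-sim)
    return [vocab[i] for i in order if i != idx][:top_k]

# Toy data (hypothetical): 2-D vectors instead of real learned embeddings.
vocab = ["he", "she", "it", "coke"]
embeddings = np.array([[1.0, 0.0], [0.9, 0.1], [0.8, 0.3], [-1.0, 0.0]])
print(nearest_words(embeddings, vocab, "he", top_k=2))
```

With real embeddings the early lists are mostly noise, and function words start to cluster as the average loss drops, which matches the progression in the log.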

● Keras on the Raspberry Pi

keras-team/keras

Deep Learning for humans http://keras.io/

Keras The Python Deep Learning library

You have just found Keras.

Keras is a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano.

It was developed with a focus on enabling fast experimentation.

Being able to go from idea to result with the least possible delay is key to doing good research.

pip3 installs packages for Python 3.

pi@raspberrypi:~ $ pip3 install keras

# Installing collected packages: scipy, keras-preprocessing, pyyaml, h5py, keras-applications, keras

Running the following before the pip3 install keras above should shorten the installation time, but the apt packages appear to be ignored.

# python3-* packages are for Python 3

sudo apt-get install python3-pandas

# sudo apt-get install python3-numpy (1:1.12.1-3)  Note: Keras 2.2.2 uses numpy-1.15.0

# sudo apt-get install python3-yaml (3.12-1)  Note: Keras 2.2.2 uses PyYAML-3.13

# sudo apt-get install python3-scipy (0.18.1-2)  Note: Keras 2.2.2 uses scipy-1.1.0

# sudo apt-get install python3-h5py (2.7.0-1)  Note: Keras 2.2.2 uses h5py-2.8.0
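To see which versions Python 3 actually picks up after both apt-get and pip3 have run, something like the following can help. It is a small sketch: `installed_versions` is a hypothetical helper name, and it relies on the common (but not universal) convention that a package exposes `__version__`.

```python
import importlib

def installed_versions(module_names):
    """Map each module name to its __version__ string, or None if it cannot be imported."""
    versions = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None
            continue
        # Not every module defines __version__, so fall back to a placeholder.
        versions[name] = getattr(module, "__version__", "unknown")
    return versions

# Import names of the packages discussed above (PyYAML is imported as "yaml").
for name, version in sorted(installed_versions(["numpy", "scipy", "yaml", "h5py"]).items()):
    print(name, version)
```

If the printed versions are the newer ones from the notes above rather than the apt versions in parentheses, pip3 installed its own copies.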

pi@raspberrypi:~ $ python3

Python 3.5.3 (default, Jan 19 2017, 14:11:04)

[GCC 6.3.0 20170124] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import keras

Using TensorFlow backend.

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

>>> print(keras.__version__)

2.2.2

>>> quit()
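The first warning says a compiled TensorFlow module was built for Python 3.4 but is being loaded by Python 3.5. A script can detect this up front instead of finding out later; the sketch below records the RuntimeWarnings raised during an import and looks for that message. The helper names `import_with_warnings` and `has_version_mismatch` are made up here, and the substring match is only an assumption based on the log text above.

```python
import importlib
import warnings

def import_with_warnings(module_name):
    """Import a module and return (module, RuntimeWarning messages raised while importing)."""
    with warnings.catch_warnings(record=True) as caught:
        # Record every warning instead of printing it once.
        warnings.simplefilter("always")
        module = importlib.import_module(module_name)
    messages = [str(w.message) for w in caught
                if issubclass(w.category, RuntimeWarning)]
    return module, messages

def has_version_mismatch(messages):
    """True if any message looks like the compile-time/runtime mismatch in the log."""
    return any("does not match runtime version" in m for m in messages)

# Demonstration with a standard-library module, which should import cleanly.
_, msgs = import_with_warnings("json")
print(has_version_mismatch(msgs))
```

Passing `"tensorflow"` instead of `"json"` on the setup above would presumably report the mismatch. Import-time warnings are only raised the first time a module is imported in a process, so the helper has to run before anything else imports it.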

Open tag 2.2.2 in the Keras GitHub repository.

https://github.com/keras-team/keras/tree/2.2.2

Download deep_dream.py from the examples directory.

wget https://raw.githubusercontent.com/keras-team/keras/2.2.2/examples/deep_dream.py

mkdir src

mkdir dest

wget https://github.com/google/deepdream/raw/master/flowers.jpg

mv flowers.jpg ./src

# Run Keras with Python 3

python3 deep_dream.py src/flowers.jpg dest/flowers

● Keras does not run under Python 2 (it was installed with pip3 only)

pi@raspberrypi:~ $ python deep_dream.py src/flowers.jpg dest/flowers

Traceback (most recent call last):

File "deep_dream.py", line 14, in <module>

from keras.preprocessing.image import load_img, save_img, img_to_array

ImportError: No module named keras.preprocessing.image

● Under Python 3 Keras loads, but deep_dream.py crashes with a Bus error

pi@raspberrypi:~ $ python3 deep_dream.py src/flowers.jpg dest/flowers

Using TensorFlow backend.

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: builtins.type size changed, may indicate binary incompatibility. Expected 432, got 412

return f(*args, **kwds)

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 56, got 52

return f(*args, **kwds)

Downloading data from https://github.com/fchollet/deep-learning-models/releases/download/v0.5/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5

87916544/87910968 [==============================] - 102s 1us/step

Model loaded.

WARNING:tensorflow:Variable += will be deprecated. Use variable.assign_add if you want assignment to the variable value or 'x = x + y' if you want a new python Tensor object.

Processing image shape (122, 163)

Bus error
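A bare `Bus error` gives no hint about where the crash happened. Python's standard `faulthandler` module can dump the Python-level traceback when the process receives a fatal signal such as SIGBUS. This does not fix the crash; it only shows which Python line was executing. A minimal sketch, with the helper name and the log file name chosen arbitrarily:

```python
import faulthandler

def enable_crash_traceback(log_path):
    """Write a Python traceback to log_path if the process dies from SIGBUS, SIGSEGV, etc."""
    # The file must stay open for the life of the process, so return it to the caller.
    log_file = open(log_path, "w")
    faulthandler.enable(file=log_file)
    return log_file
```

Adding `crash_log = enable_crash_traceback("crash.log")` near the top of deep_dream.py, or running `python3 -X faulthandler deep_dream.py src/flowers.jpg dest/flowers` (which writes to stderr instead), should show the last Python frame before the Bus error.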

# python-* packages are for Python 2

sudo apt-get -y install python-pandas

sudo apt-get -y install python-numpy

sudo apt-get -y install python-yaml

sudo apt-get -y install python-scipy

sudo apt-get -y install python-h5py

pip install keras

● Introduction to TensorFlow Lite

TensorFlow Lite is a lightweight version of TensorFlow for mobile devices (smartphones) and embedded devices.

Introduction to TensorFlow Lite

tensorflow/tensorflow/contrib/lite/

TensorFlow Lite for Raspberry Pi

● Building TensorFlow Lite on the Raspberry Pi itself (native compiling)

Native compiling

# install the toolchain

sudo apt-get install build-essential

cd

git clone https://github.com/tensorflow/tensorflow

cd tensorflow

./tensorflow/contrib/lite/download_dependencies.sh

./tensorflow/contrib/lite/build_rpi_lib.sh

# This should compile a static library in:

# tensorflow/contrib/lite/gen/lib/rpi_armv7/libtensorflow-lite.a

pi@raspberrypi:~/tensorflow $ ./tensorflow/contrib/lite/build_rpi_lib.sh

+ set -e

+++ dirname ./build_rpi_lib.sh

++ cd .

++ pwd

+ SCRIPT_DIR=/home/pi/tensorflow/tensorflow/contrib/lite

+ cd /home/pi/tensorflow/tensorflow/contrib/lite/../../..

+ CC_PREFIX=arm-linux-gnueabihf-

+ make -j 3 -f tensorflow/contrib/lite/Makefile TARGET=RPI TARGET_ARCH=armv7

/bin/sh: 1: [[: not found

arm-linux-gnueabihf-gcc --std=c++11 -O3 -DNDEBUG -march=armv7-a -mfpu=neon-vfpv4 -funsafe-math-optimization

...

./tensorflow/contrib/lite/schema/schema_generated.h:1214:33: error: no matching function for call to ‘flatbuffers::Verifier::Verify(const flatbuffers::Vector<float>*)’

verifier.Verify(min()) &&

pi@raspberrypi:~/tensorflow/tensorflow/contrib/lite $ [[

> ^C

pi@raspberrypi:~/tensorflow/tensorflow/contrib/lite $ /bin/bash -c "type [["

[[ is a shell keyword

pi@raspberrypi:~/tensorflow/tensorflow/contrib/lite $ head ./build_rpi_lib.sh

#!/bin/bash -x

# Copyright 2017 The TensorFlow Authors. All Rights Reserved.

#

Bash script: “[[: not found”

test.sh

#!/bin/bash

if [[ "string" == "string" ]]; then

echo This is Bash

fi

$ readlink -f $(which sh)

/bin/dash

$ sh test.sh

test.sh: 2: test.sh: [[: not found

$ bash test.sh

This is Bash

$ chmod 755 test.sh

$ ./test.sh

This is Bash
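The transcript above boils down to this: `[[` is a bash keyword, and `sh` on this system resolves to dash, which does not know it. build_rpi_lib.sh itself starts with `#!/bin/bash`, so the `/bin/sh: 1: [[: not found` message presumably comes from make, which runs its commands through /bin/sh unless told otherwise. The same check as `readlink -f $(which sh)` can be done from Python; `resolve_sh` is a hypothetical helper name.

```python
import os
import shutil

def resolve_sh():
    """Return the real path of the `sh` found on PATH, or None if there is none."""
    sh = shutil.which("sh")
    # realpath follows symlinks, like `readlink -f`.
    return os.path.realpath(sh) if sh else None

print(resolve_sh())
```

If this prints a path ending in `dash`, any build step that goes through `sh` cannot use `[[ ... ]]`.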

● After building and installing FlatBuffers

pi@raspberrypi:~/tensorflow $ ./tensorflow/contrib/lite/build_rpi_lib.sh

...

./tensorflow/contrib/lite/schema/schema_generated.h:4471:39: error: no matching function for call to ‘flatbuffers::Verifier::Verify(const flatbuffers::Vector<flatbuffers::Offset<tflite::SubGraph> >*)’

verifier.Verify(subgraphs()) &&

...

./tensorflow/contrib/lite/schema/schema_generated.h:4474:41: error: no matching function for call to ‘flatbuffers::Verifier::Verify(const flatbuffers::String*)’

verifier.Verify(description()) &&

...

./tensorflow/contrib/lite/schema/schema_generated.h:4476:37: error: no matching function for call to ‘flatbuffers::Verifier::Verify(const flatbuffers::Vector<flatbuffers::Offset<tflite::Buffer> >*)’

verifier.Verify(buffers()) &&

...

+ pwd

/home/pi/tensorflow

CC_PREFIX=arm-linux-gnueabihf- make -j 1 -f tensorflow/contrib/lite/Makefile TARGET=RPI TARGET_ARCH=armv7

mv /home/pi/tensorflow/tensorflow/contrib/lite/downloads/flatbuffers/ /home/pi/tensorflow/tensorflow/contrib/lite/downloads/flatbuffers_/

#

mkdir /home/pi/tensorflow/tensorflow/contrib/lite/downloads/flatbuffers

mkdir /home/pi/tensorflow/tensorflow/contrib/lite/downloads/flatbuffers/include

cp -r /usr/local/include/flatbuffers /home/pi/tensorflow/tensorflow/contrib/lite/downloads/flatbuffers/include/
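The mv / mkdir / cp steps above swap the FlatBuffers headers fetched by download_dependencies.sh for the ones installed under /usr/local/include. The same steps can be scripted; the sketch below mirrors them with the standard library. `use_installed_flatbuffers` is a hypothetical helper name, and whether the swap actually resolves the `Verify` errors is not shown in the logs above.

```python
import shutil
from pathlib import Path

def use_installed_flatbuffers(downloads_dir, installed_include):
    """Replace downloads/flatbuffers with a copy of the installed FlatBuffers headers."""
    downloads_dir = Path(downloads_dir)
    bundled = downloads_dir / "flatbuffers"
    backup = downloads_dir / "flatbuffers_"
    # Keep the downloaded copy so the change can be undone (mirrors the `mv`).
    bundled.rename(backup)
    # Recreate flatbuffers/include and copy the installed headers into it.
    target = bundled / "include" / "flatbuffers"
    target.parent.mkdir(parents=True)
    shutil.copytree(str(Path(installed_include) / "flatbuffers"), str(target))
    return target
```

With the paths from the commands above, the call would be `use_installed_flatbuffers("/home/pi/tensorflow/tensorflow/contrib/lite/downloads", "/usr/local/include")`.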

● FlatBuffers

FlatBuffers

FlatBuffers google/flatbuffers

cd

git clone https://github.com/google/flatbuffers.git

cd flatbuffers

cmake -G "Unix Makefiles" -DCMAKE_BUILD_TYPE=Release

make

sudo make install

pi@raspberrypi:~/flatbuffers $ cmake -G "Unix Makefiles" -DCMAKE_BUILD_TYPE=Release

-- The C compiler identification is GNU 6.3.0

-- The CXX compiler identification is GNU 6.3.0

-- Check for working C compiler: /usr/bin/cc

-- Check for working C compiler: /usr/bin/cc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /usr/bin/c++

-- Check for working CXX compiler: /usr/bin/c++ -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Configuring done

-- Generating done

-- Build files have been written to: /home/pi/flatbuffers

pi@raspberrypi:~/flatbuffers $ make

Scanning dependencies of target flatc

[ 1%] Building CXX object CMakeFiles/flatc.dir/src/code_generators.cpp.o

[ 3%] Building CXX object CMakeFiles/flatc.dir/src/idl_parser.cpp.o

[ 5%] Building CXX object CMakeFiles/flatc.dir/src/idl_gen_text.cpp.o

[ 7%] Building CXX object CMakeFiles/flatc.dir/src/reflection.cpp.o

[ 9%] Building CXX object CMakeFiles/flatc.dir/src/util.cpp.o

...

Scanning dependencies of target flathash

[ 98%] Building CXX object CMakeFiles/flathash.dir/src/flathash.cpp.o

[100%] Linking CXX executable flathash

[100%] Built target flathash

pi@raspberrypi:~/flatbuffers $ sudo make install

[ 45%] Built target flatc

[ 62%] Built target flattests

[ 68%] Built target flatsamplebinary

[ 84%] Built target flatsampletext

[ 96%] Built target flatbuffers

[100%] Built target flathash

Install the project...

-- Install configuration: "Release"

-- Installing: /usr/local/include/flatbuffers

-- Installing: /usr/local/include/flatbuffers/reflection.h

-- Installing: /usr/local/include/flatbuffers/util.h

-- Installing: /usr/local/include/flatbuffers/hash.h

-- Installing: /usr/local/include/flatbuffers/flatbuffers.h

-- Installing: /usr/local/include/flatbuffers/code_generators.h

-- Installing: /usr/local/include/flatbuffers/stl_emulation.h

-- Installing: /usr/local/include/flatbuffers/grpc.h

-- Installing: /usr/local/include/flatbuffers/reflection_generated.h

-- Installing: /usr/local/include/flatbuffers/registry.h

-- Installing: /usr/local/include/flatbuffers/idl.h

-- Installing: /usr/local/include/flatbuffers/base.h

-- Installing: /usr/local/include/flatbuffers/minireflect.h

-- Installing: /usr/local/include/flatbuffers/flatc.h

-- Installing: /usr/local/include/flatbuffers/flexbuffers.h

-- Installing: /usr/local/lib/cmake/flatbuffers/FlatbuffersConfig.cmake

-- Installing: /usr/local/lib/cmake/flatbuffers/FlatbuffersConfigVersion.cmake

-- Installing: /usr/local/lib/libflatbuffers.a

-- Installing: /usr/local/lib/cmake/flatbuffers/FlatbuffersTargets.cmake

-- Installing: /usr/local/lib/cmake/flatbuffers/FlatbuffersTargets-release.cmake

-- Installing: /usr/local/bin/flatc

-- Installing: /usr/local/lib/cmake/flatbuffers/FlatcTargets.cmake

-- Installing: /usr/local/lib/cmake/flatbuffers/FlatcTargets-release.cmake

● Building TensorFlow from source on the Raspberry Pi

・2018/08/18

How to build Google's Bazel build tool on the Raspberry Pi and self-build TensorFlow

・2018/08/18

How to self-build the TensorFlow Deep Learning Framework on the Raspberry Pi

● Building TensorFlow from source on the Raspberry Pi

Installing TensorFlow on Raspbian

over 24 hours to build

Installing TensorFlow from Sources

Ubuntu

cd

git clone https://github.com/tensorflow/tensorflow

cd tensorflow

# where Branch is the desired branch

git checkout r1.7

# Python 2.7

sudo apt-get install python-numpy python-dev python-pip python-wheel

# Python 3.n

sudo apt-get install python3-numpy python3-dev python3-pip python3-wheel

./configure

pi@raspberrypi:~/tensorflow $ ./configure

Cannot find bazel. Please install bazel.

Configuration finished

pi@raspberrypi:~/tensorflow $ sudo apt-get install bazel

Reading package lists... Done

Building dependency tree

Reading state information... Done

E: Unable to locate package bazel

pi@raspberrypi:~/tensorflow $ apt-cache search bazel

(there is no bazel package)

bazel

cd

git clone https://github.com/bazelbuild/bazel.git --depth 1

cd bazel

# scripts/bootstrap/compile.sh

export BAZEL_JAVAC_OPTS="-J-Xmx500M"

scripts/bootstrap/compile.sh

# Use BAZEL_JAVAC_OPTS to pass additional arguments to javac, e.g.,

# export BAZEL_JAVAC_OPTS="-J-Xmx2g -J-Xms200m"

# Useful if your system chooses too small of a max heap for javac.

# We intentionally rely on shell word splitting to allow multiple

# additional arguments to be passed to javac.

run "${JAVAC}" -classpath "${classpath}" -sourcepath "${sourcepath}" \

-d "${output}/classes" -source "$JAVA_VERSION" -target "$JAVA_VERSION" \

-encoding UTF-8 ${BAZEL_JAVAC_OPTS} "@${paramfile}"
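The comment in compile.sh explains why `${BAZEL_JAVAC_OPTS}` is left unquoted: the shell splits the value on whitespace, so one variable can carry several javac arguments. The sketch below imitates that splitting in Python to show what javac ends up receiving. `javac_command` is a made-up helper, the argument list is shortened, and `str.split()` only approximates shell word splitting (no globbing, no custom IFS).

```python
def javac_command(javac, extra_opts, paramfile):
    """Build an argument list the way the unquoted ${BAZEL_JAVAC_OPTS} expands."""
    # Unquoted expansion splits on whitespace; an empty value adds no arguments.
    return [javac, "-encoding", "UTF-8"] + extra_opts.split() + ["@" + paramfile]

print(javac_command("javac", "-J-Xmx2g -J-Xms200m", "params"))
print(javac_command("javac", "-J-Xmx500M", "params"))
```

The second call corresponds to the `export BAZEL_JAVAC_OPTS="-J-Xmx500M"` used above, which caps the javac heap at 500 MB.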

pi@raspberrypi:~/bazel $ ./compile.sh

🍃 Building Bazel from scratch

ERROR: Must specify PROTOC if not bootstrapping from the distribution artifact

pi@raspberrypi:~/bazel $ ls -l /usr/bin/protoc

-rwxr-xr-x 1 root root 9856 Nov 25 2016 /usr/bin/protoc

export PROTOC=/usr/bin/protoc

pi@raspberrypi:~/bazel $ ./compile.sh

Building Bazel from scratch

ERROR: Must specify GRPC_JAVA_PLUGIN if not bootstrapping from the distribution artifact

GRPC_JAVA_PLUGIN ?

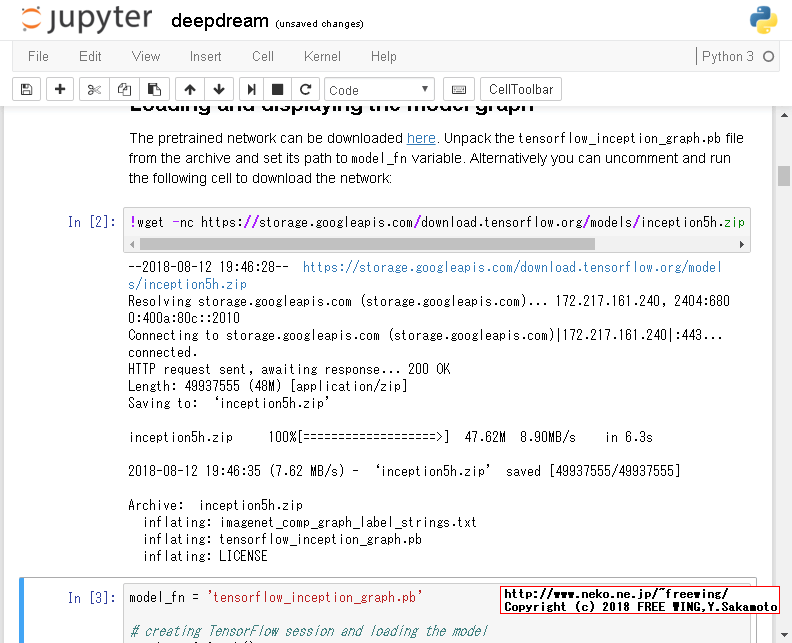

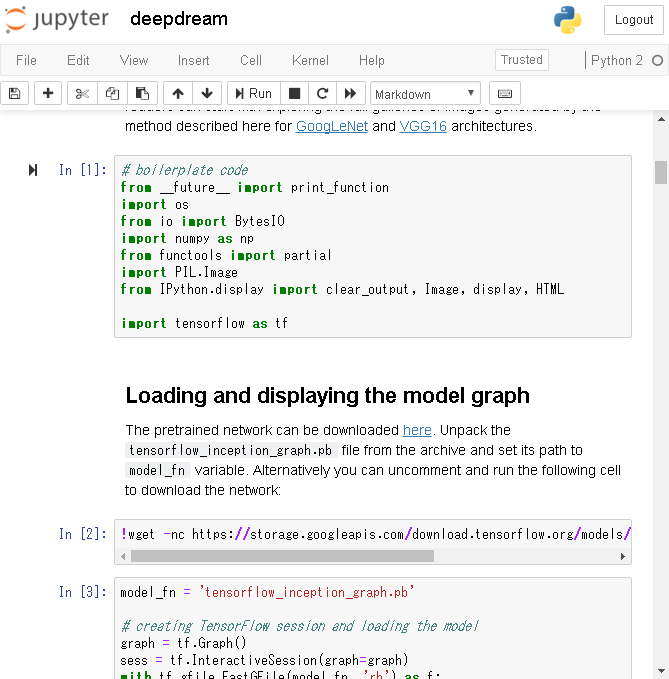

● DeepDreaming with TensorFlow

# boilerplate code

from __future__ import print_function

import os

from io import BytesIO

import numpy as np

from functools import partial

import PIL.Image

from IPython.display import clear_output, Image, display, HTML

import tensorflow as tf

!wget -nc https://storage.googleapis.com/download.tensorflow.org/models/inception5h.zip && unzip -n inception5h.zip

model_fn = 'tensorflow_inception_graph.pb'

# creating TensorFlow session and loading the model

graph = tf.Graph()

sess = tf.InteractiveSession(graph=graph)

with tf.gfile.FastGFile(model_fn, 'rb') as f:
    graph_def = tf.GraphDef()
    graph_def.ParseFromString(f.read())

t_input = tf.placeholder(np.float32, name='input') # define the input tensor

imagenet_mean = 117.0

t_preprocessed = tf.expand_dims(t_input-imagenet_mean, 0)

tf.import_graph_def(graph_def, {'input':t_preprocessed})

layers = [op.name for op in graph.get_operations() if op.type=='Conv2D' and 'import/' in op.name]

feature_nums = [int(graph.get_tensor_by_name(name+':0').get_shape()[-1]) for name in layers]

print('Number of layers', len(layers))

print('Total number of feature channels:', sum(feature_nums))

# Helper functions for TF Graph visualization

def strip_consts(graph_def, max_const_size=32):
    """Strip large constant values from graph_def."""
    strip_def = tf.GraphDef()
    for n0 in graph_def.node:
        n = strip_def.node.add()
        n.MergeFrom(n0)
        if n.op == 'Const':
            tensor = n.attr['value'].tensor
            size = len(tensor.tensor_content)
            if size > max_const_size:
                tensor.tensor_content = tf.compat.as_bytes("<stripped %d bytes>"%size)
    return strip_def

def rename_nodes(graph_def, rename_func):
    res_def = tf.GraphDef()
    for n0 in graph_def.node:
        n = res_def.node.add()
        n.MergeFrom(n0)
        n.name = rename_func(n.name)
        for i, s in enumerate(n.input):
            n.input[i] = rename_func(s) if s[0]!='^' else '^'+rename_func(s[1:])
    return res_def

def show_graph(graph_def, max_const_size=32):
    """Visualize TensorFlow graph."""
    if hasattr(graph_def, 'as_graph_def'):
        graph_def = graph_def.as_graph_def()
    strip_def = strip_consts(graph_def, max_const_size=max_const_size)
    code = """
        <script>
          function load() {{
            document.getElementById("{id}").pbtxt = {data};
          }}
        </script>
        <link rel="import" href="https://tensorboard.appspot.com/tf-graph-basic.build.html" onload=load()>
        <div style="height:600px">
          <tf-graph-basic id="{id}"></tf-graph-basic>
        </div>
    """.format(data=repr(str(strip_def)), id='graph'+str(np.random.rand()))

    # Escape double quotes so the markup can be embedded in the srcdoc attribute.
    iframe = """
        <iframe seamless style="width:800px;height:620px;border:0" srcdoc="{}"></iframe>
    """.format(code.replace('"', '&quot;'))
    display(HTML(iframe))

# Visualizing the network graph. Be sure to expand the "mixed" nodes to see their

# internal structure. We are going to visualize "Conv2D" nodes.

tmp_def = rename_nodes(graph_def, lambda s:"/".join(s.split('_',1)))

show_graph(tmp_def)

# Picking some internal layer. Note that we use outputs before applying the ReLU nonlinearity

# to have non-zero gradients for features with negative initial activations.

layer = 'mixed4d_3x3_bottleneck_pre_relu'

channel = 139 # picking some feature channel to visualize

# start with a gray image with a little noise

img_noise = np.random.uniform(size=(224,224,3)) + 100.0

def showarray(a, fmt='jpeg'):
    a = np.uint8(np.clip(a, 0, 1)*255)
    f = BytesIO()
    PIL.Image.fromarray(a).save(f, fmt)
    display(Image(data=f.getvalue()))

def visstd(a, s=0.1):
    '''Normalize the image range for visualization'''
    return (a-a.mean())/max(a.std(), 1e-4)*s + 0.5

def T(layer):
    '''Helper for getting layer output tensor'''
    return graph.get_tensor_by_name("import/%s:0"%layer)

def render_naive(t_obj, img0=img_noise, iter_n=20, step=1.0):
    t_score = tf.reduce_mean(t_obj) # defining the optimization objective
    t_grad = tf.gradients(t_score, t_input)[0] # behold the power of automatic differentiation!

    img = img0.copy()
    for i in range(iter_n):
        g, score = sess.run([t_grad, t_score], {t_input:img})
        # normalizing the gradient, so the same step size should work
        g /= g.std()+1e-8 # for different layers and networks
        img += g*step
        print(score, end = ' ')
    clear_output()
    showarray(visstd(img))

render_naive(T(layer)[:,:,:,channel])
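The core of render_naive is plain gradient ascent with one twist: each gradient is divided by its standard deviation before the step, so the same `step` works regardless of the raw gradient scale. The sketch below shows that update rule with NumPy only, on a toy objective instead of a network layer. `normalized_ascent`, `score`, `grad` and `target` are all made up for illustration.

```python
import numpy as np

def normalized_ascent(grad_fn, x0, iter_n=20, step=1.0):
    """Gradient ascent where each gradient is divided by its standard deviation."""
    x = x0.copy()
    for _ in range(iter_n):
        g = grad_fn(x)
        # Same normalisation as in render_naive: the step size no longer
        # depends on the raw scale of the gradient.
        g = g / (g.std() + 1e-8)
        x += g * step
    return x

# Toy objective (hypothetical): score(x) = -mean((x - target)^2).
target = np.linspace(50.0, 150.0, 100)

def score(x):
    return -np.mean((x - target) ** 2)

def grad(x):
    return -2.0 * (x - target) / x.size

x0 = np.zeros(100)
x1 = normalized_ascent(grad, x0)
print(score(x0), score(x1))
```

The second printed score should be higher (closer to zero) than the first. In the notebook the score is the mean activation of one channel and `x` is the image.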

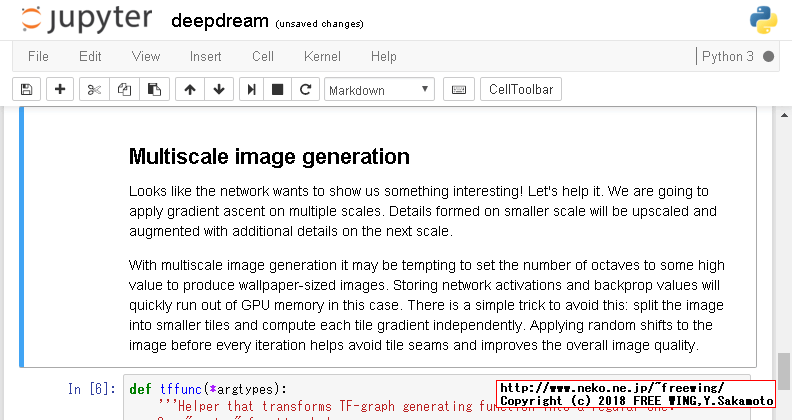

def tffunc(*argtypes):
    '''Helper that transforms TF-graph generating function into a regular one.
    See "resize" function below.
    '''
    placeholders = list(map(tf.placeholder, argtypes))
    def wrap(f):
        out = f(*placeholders)
        def wrapper(*args, **kw):
            return out.eval(dict(zip(placeholders, args)), session=kw.get('session'))
        return wrapper
    return wrap

# Helper function that uses TF to resize an image
def resize(img, size):
    img = tf.expand_dims(img, 0)
    return tf.image.resize_bilinear(img, size)[0,:,:,:]
resize = tffunc(np.float32, np.int32)(resize)

def calc_grad_tiled(img, t_grad, tile_size=512):
    '''Compute the value of tensor t_grad over the image in a tiled way.
    Random shifts are applied to the image to blur tile boundaries over
    multiple iterations.'''
    sz = tile_size
    h, w = img.shape[:2]
    sx, sy = np.random.randint(sz, size=2)
    img_shift = np.roll(np.roll(img, sx, 1), sy, 0)
    grad = np.zeros_like(img)
    for y in range(0, max(h-sz//2, sz),sz):
        for x in range(0, max(w-sz//2, sz),sz):
            sub = img_shift[y:y+sz,x:x+sz]
            g = sess.run(t_grad, {t_input:sub})
            grad[y:y+sz,x:x+sz] = g
    return np.roll(np.roll(grad, -sx, 1), -sy, 0)

def render_multiscale(t_obj, img0=img_noise, iter_n=10, step=1.0, octave_n=3, octave_scale=1.4):
    t_score = tf.reduce_mean(t_obj) # defining the optimization objective
    t_grad = tf.gradients(t_score, t_input)[0] # behold the power of automatic differentiation!

    img = img0.copy()
    for octave in range(octave_n):
        if octave>0:
            hw = np.float32(img.shape[:2])*octave_scale
            img = resize(img, np.int32(hw))
        for i in range(iter_n):
            g = calc_grad_tiled(img, t_grad)
            # normalizing the gradient, so the same step size should work
            g /= g.std()+1e-8 # for different layers and networks
            img += g*step
            print('.', end = ' ')
        clear_output()
        showarray(visstd(img))

render_multiscale(T(layer)[:,:,:,channel])
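calc_grad_tiled processes the image in tiles so that a large image never has to go through the network in one piece, and it shifts the image by a random offset with `np.roll` first so that tile seams land in a different place on every iteration. The shift is undone at the end. The NumPy-only sketch below shows just that roll / tile / unroll pattern with an arbitrary per-pixel function standing in for the gradient computation. `apply_tiled` is a hypothetical name, and unlike the notebook loop (whose bounds can leave a narrow trailing strip unprocessed) it covers every pixel.

```python
import numpy as np

def apply_tiled(img, fn, tile_size=4, rng=None):
    """Evaluate fn tile by tile on a randomly shifted copy of img, then undo the shift."""
    rng = rng or np.random.RandomState(0)
    h, w = img.shape[:2]
    sx, sy = rng.randint(tile_size, size=2)
    shifted = np.roll(np.roll(img, sx, 1), sy, 0)
    out = np.zeros_like(img)
    # Cover the whole image, including a smaller final tile at the edges.
    for y in range(0, h, tile_size):
        for x in range(0, w, tile_size):
            tile = shifted[y:y + tile_size, x:x + tile_size]
            out[y:y + tile_size, x:x + tile_size] = fn(tile)
    # Roll back so the result lines up with the original image.
    return np.roll(np.roll(out, -sx, 1), -sy, 0)

img = np.arange(10 * 7 * 3, dtype=np.float32).reshape(10, 7, 3)
tiled = apply_tiled(img, lambda t: t * 2 + 1)
print(np.allclose(tiled, img * 2 + 1))
```

For a per-pixel function the tiled result equals the direct result. A real network gradient differs near tile borders, which is why the random shift matters there.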

k = np.float32([1,4,6,4,1])

k = np.outer(k, k)

k5x5 = k[:,:,None,None]/k.sum()*np.eye(3, dtype=np.float32)

def lap_split(img):
    '''Split the image into lo and hi frequency components'''
    with tf.name_scope('split'):
        lo = tf.nn.conv2d(img, k5x5, [1,2,2,1], 'SAME')
        lo2 = tf.nn.conv2d_transpose(lo, k5x5*4, tf.shape(img), [1,2,2,1])
        hi = img-lo2
    return lo, hi

def lap_split_n(img, n):
    '''Build Laplacian pyramid with n splits'''
    levels = []
    for i in range(n):
        img, hi = lap_split(img)
        levels.append(hi)
    levels.append(img)
    return levels[::-1]

def lap_merge(levels):
    '''Merge Laplacian pyramid'''
    img = levels[0]
    for hi in levels[1:]:
        with tf.name_scope('merge'):
            img = tf.nn.conv2d_transpose(img, k5x5*4, tf.shape(hi), [1,2,2,1]) + hi
    return img

def normalize_std(img, eps=1e-10):
    '''Normalize image by making its standard deviation = 1.0'''
    with tf.name_scope('normalize'):
        std = tf.sqrt(tf.reduce_mean(tf.square(img)))
        return img/tf.maximum(std, eps)

def lap_normalize(img, scale_n=4):
    '''Perform the Laplacian pyramid normalization.'''
    img = tf.expand_dims(img,0)
    tlevels = lap_split_n(img, scale_n)
    tlevels = list(map(normalize_std, tlevels))
    out = lap_merge(tlevels)
    return out[0,:,:,:]

# Showing the lap_normalize graph with TensorBoard
lap_graph = tf.Graph()
with lap_graph.as_default():
    lap_in = tf.placeholder(np.float32, name='lap_in')
    lap_out = lap_normalize(lap_in)

show_graph(lap_graph)
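The `k5x5` filter used by lap_split and lap_merge is easy to misread, so here is a NumPy-only check of what it contains. The three `k` lines are copied from the code above; the printed checks are additions. The outer product of `[1, 4, 6, 4, 1]` is a 5x5 binomial blur whose entries sum to 256, and multiplying by `np.eye(3)` broadcasts it to shape (5, 5, 3, 3) so that each colour channel is blurred independently.

```python
import numpy as np

k = np.float32([1, 4, 6, 4, 1])
k = np.outer(k, k)
k5x5 = k[:, :, None, None] / k.sum() * np.eye(3, dtype=np.float32)

# Filter layout is (height, width, in_channels, out_channels).
print(k5x5.shape)
# Weights from a channel to itself sum to 1; cross-channel weights are all zero.
print(k5x5[:, :, 0, 0].sum(), k5x5[:, :, 0, 1].sum())
```

As for the `k5x5*4` in the `conv2d_transpose` calls, my reading is that it compensates for the stride-2 upsampling, which spreads each low-resolution pixel over four times as many output positions. That is an interpretation, not something stated in the original code.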

def render_lapnorm(t_obj, img0=img_noise, visfunc=visstd,
                   iter_n=10, step=1.0, octave_n=3, octave_scale=1.4, lap_n=4):
    t_score = tf.reduce_mean(t_obj) # defining the optimization objective
    t_grad = tf.gradients(t_score, t_input)[0] # behold the power of automatic differentiation!
    # build the laplacian normalization graph
    lap_norm_func = tffunc(np.float32)(partial(lap_normalize, scale_n=lap_n))

    img = img0.copy()
    for octave in range(octave_n):
        if octave>0:
            hw = np.float32(img.shape[:2])*octave_scale
            img = resize(img, np.int32(hw))
        for i in range(iter_n):
            g = calc_grad_tiled(img, t_grad)
            g = lap_norm_func(g)
            img += g*step
            print('.', end = ' ')
        clear_output()
        showarray(visfunc(img))

render_lapnorm(T(layer)[:,:,:,channel])

render_lapnorm(T(layer)[:,:,:,65])

render_lapnorm(T('mixed3b_1x1_pre_relu')[:,:,:,101])

render_lapnorm(T(layer)[:,:,:,65]+T(layer)[:,:,:,139], octave_n=4)

def render_deepdream(t_obj, img0=img_noise,
                     iter_n=10, step=1.5, octave_n=4, octave_scale=1.4):
    t_score = tf.reduce_mean(t_obj) # defining the optimization objective
    t_grad = tf.gradients(t_score, t_input)[0] # behold the power of automatic differentiation!

    # split the image into a number of octaves
    img = img0
    octaves = []
    for i in range(octave_n-1):
        hw = img.shape[:2]
        lo = resize(img, np.int32(np.float32(hw)/octave_scale))
        hi = img-resize(lo, hw)
        img = lo
        octaves.append(hi)

    # generate details octave by octave
    for octave in range(octave_n):
        if octave>0:
            hi = octaves[-octave]
            img = resize(img, hi.shape[:2])+hi
        for i in range(iter_n):
            g = calc_grad_tiled(img, t_grad)
            img += g*(step / (np.abs(g).mean()+1e-7))
            print('.',end = ' ')
        clear_output()
        showarray(img/255.0)

img0 = PIL.Image.open('pilatus800.jpg')

img0 = np.float32(img0)

showarray(img0/255.0)

render_deepdream(tf.square(T('mixed4c')), img0)

render_deepdream(T(layer)[:,:,:,139], img0)
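render_deepdream first splits the image into octaves: it repeatedly shrinks the image and stores, at each size, the detail lost by shrinking (`hi = img - resize(lo, hw)`). It then works back up from the smallest image, adding the stored detail at each size. The NumPy-only sketch below shows that the split and merge are exact inverses when no gradient steps are applied in between. The nearest-neighbour `resize_nearest` is a stand-in for the TensorFlow bilinear `resize`, and the function names are made up for illustration.

```python
import numpy as np

def resize_nearest(img, size):
    """Nearest-neighbour resize of an (H, W, C) array to size=(h, w)."""
    h, w = int(size[0]), int(size[1])
    rows = np.arange(h) * img.shape[0] // h
    cols = np.arange(w) * img.shape[1] // w
    return img[rows][:, cols]

def split_octaves(img, octave_n=4, octave_scale=1.4):
    """Return the smallest image and the per-octave detail layers, largest first."""
    octaves = []
    for _ in range(octave_n - 1):
        hw = img.shape[:2]
        lo = resize_nearest(img, np.int32(np.float32(hw) / octave_scale))
        hi = img - resize_nearest(lo, hw)
        img = lo
        octaves.append(hi)
    return img, octaves

def merge_octaves(base, octaves):
    """Upscale from the smallest image, adding back the stored detail at each size."""
    img = base
    for hi in reversed(octaves):
        img = resize_nearest(img, hi.shape[:2]) + hi
    return img

img = np.random.RandomState(0).rand(64, 48, 3).astype(np.float32)
base, octaves = split_octaves(img)
print(base.shape, np.allclose(merge_octaves(base, octaves), img, atol=1e-5))
```

In the notebook the gradient ascent steps run between the merges, so the dream patterns are added at every scale while the original detail is restored on top.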



Copyright (c) 2018 FREE WING, Y.Sakamoto


http://www.neko.ne.jp/~freewing/raspberry_pi/raspberry_pi_install_tensorflow_deep_learning_framework_fail/