・2018/08/14

[Success] How to Install the TensorFlow Deep Learning Framework on a Raspberry Pi


(Putting the TensorFlow Deep Learning Framework on a Raspberry Pi and having nightmares with Google DeepDream)

Tags: [Raspberry Pi], [Electronics], [Deep Learning]

● How to run Google DeepDream on a Raspberry Pi and mass-produce creepy pictures

Google's original DeepDream runs on a deep learning framework called Caffe.

Run Google DeepDream on a Raspberry Pi and mass-produce the creepy pictures that were all the rage for a while.

DeepDream - Wikipedia

Example of an image generated by DeepDream (quoted from Wikipedia)

・DeepDream

● How to run DeepDream with the Caffe Deep Learning Framework on a Raspberry Pi

・2018/08/04

[Build version] Running DeepDream on a Raspberry Pi to mass-produce creepy pictures with the Caffe Deep Learning Framework

Build the Caffe Deep Learning Framework on a Raspberry Pi and run DeepDream to generate creepy pictures

・2018/08/04

[Install version] Running DeepDream on a Raspberry Pi to mass-produce creepy pictures with Caffe Deep Learning

Install the Caffe Deep Learning Framework on a Raspberry Pi and run DeepDream to generate creepy pictures

・2018/08/06

A test of mass-producing creepy DeepDream pictures quickly with the 64-bit power of the Orange Pi PC 2

Build the Caffe Deep Learning Framework on an OrangePi PC2 and run DeepDream to generate creepy pictures

● Below is the [failure summary] of my trial and error when I first tried to get TensorFlow running

・2018/08/10

[Failure] How to Install the TensorFlow Deep Learning Framework on a Raspberry Pi

Putting the TensorFlow Deep Learning Framework on a Raspberry Pi and having nightmares with Google DeepDream

● Raspberry Pi Raspbian OS version used this time

RASPBIAN STRETCH WITH DESKTOP

Version:June 2018

Release date: 2018-06-27

Kernel version: 4.14

pi@raspberrypi:~/pytorch $ uname -a

Linux raspberrypi 4.14.50-v7+ #1122 SMP Tue Jun 19 12:26:26 BST 2018 armv7l GNU/Linux

● Installing TensorFlow on a Raspberry Pi easily from the command line

Incidentally, TensorFlow is pronounced "tensor flow" (テンソルフロー).

TensorFlow is a machine learning framework.

Installing TensorFlow on Raspbian

tensorflow/tensorflow

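The easiest way to install TensorFlow on a Raspberry Pi is the official TensorFlow command-line procedure. However, as of August 2018 the tensorflow library installed by pip3 install tensorflow appears to be mismatched with the Raspberry Pi's Python version, and importing it raises a RuntimeWarning. I can't say whether the two are related, but in the end the program died with a Bus error and did not work.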

Python targeted by pip3 install tensorflow: Python 3.4

Python on the Raspberry Pi: Python 3.5

The RuntimeWarning message:

RuntimeWarning: compiletime version 3.4 of module 'tensorflow.python.framework.fast_tensor_util' does not match runtime version 3.5

So I googled for a way to get TensorFlow running on the Raspberry Pi and found TensorFlow for Arm, below.

This build worked without problems with the Raspberry Pi's Python 3.5.
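The mismatch above is between the Python version a binary was built for and the one running it. Binary wheels carry that version in their filename as a cpXY tag (cp35 = CPython 3.5). A minimal sketch, assuming the standard wheel naming name-version-pythontag-abitag-platform.whl, that reads the tag and compares it with the running interpreter. It only inspects the filename, not the compiled modules inside, so it is a quick sanity check rather than a guarantee.

```python
import re
import sys


def wheel_python_tag(filename):
    """Return (major, minor) from a wheel's cpXY tag, e.g. 'cp35' -> (3, 5)."""
    # Wheel names end with -<python tag>-<abi tag>-<platform>.whl
    parts = filename[:-len(".whl")].split("-")
    match = re.fullmatch(r"cp(\d)(\d+)", parts[-3])
    if match is None:
        raise ValueError("no cpXY python tag in " + filename)
    return int(match.group(1)), int(match.group(2))


def wheel_matches_interpreter(filename, version_info=sys.version_info):
    """True if the wheel was built for the running interpreter's major.minor version."""
    return wheel_python_tag(filename) == tuple(version_info[:2])


if __name__ == "__main__":
    name = "tensorflow-1.10.0-cp35-none-linux_armv7l.whl"
    print(name, "matches this Python:", wheel_matches_interpreter(name))
```

On the Raspbian Stretch image used here (Python 3.5.3), the cp35 wheel from lhelontra/tensorflow-on-arm should report True.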

● TensorFlow for Arm on the Raspberry Pi

lhelontra/tensorflow-on-arm

lhelontra/tensorflow-on-arm - Releases

● Tensorflow - 1.10.0 @lhelontra lhelontra released this Aug 13, 2018

#

sudo apt-get update

# Raspberry Pi3 = linux_armv7l

# cp35 = Python 3.5

# Tensorflow - 1.10.0 @lhelontra lhelontra released this Aug 13, 2018

wget https://github.com/lhelontra/tensorflow-on-arm/releases/download/v1.10.0/tensorflow-1.10.0-cp35-none-linux_armv7l.whl

# install tensorflow-1.10.0-cp35-none-linux_armv7l.whl

sudo pip3 install tensorflow-1.10.0-cp35-none-linux_armv7l.whl
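The comments above encode the wheel name: linux_armv7l for the Raspberry Pi 3 and cp35 for Python 3.5. A small sketch that assembles the same download URL from the running interpreter and CPU. The URL pattern is copied from the v1.10.0 wget commands in this article; that other releases or architectures follow it is an assumption, so check the Releases page before relying on it.

```python
import platform
import sys

# Base URL pattern taken from the v1.10.0 download in this article.
BASE = "https://github.com/lhelontra/tensorflow-on-arm/releases/download/v{ver}/"


def tf_on_arm_wheel_url(ver="1.10.0", py=None, machine=None):
    """Build a tensorflow-on-arm wheel URL for a Python (major, minor) and CPU name."""
    major, minor = (py or sys.version_info[:2])
    machine = machine or platform.machine()  # 'armv7l' on a Raspberry Pi 3 running Raspbian
    filename = "tensorflow-{0}-cp{1}{2}-none-linux_{3}.whl".format(ver, major, minor, machine)
    return BASE.format(ver=ver) + filename


if __name__ == "__main__":
    print(tf_on_arm_wheel_url())
```

Run on the Pi with python3 it should print the cp35 URL used above; run with Python 2.7 it yields the cp27 wheel used later for mtyka/deepdream_highres.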

● No warnings when you import tensorflow!

pi@raspberrypi:~/tf $ python3

Python 3.5.3 (default, Jan 19 2017, 14:11:04)

[GCC 6.3.0 20170124] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow

>>> print (tensorflow.__version__)

1.10.0

>>> quit()

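If a script then fails with ImportError: No module named 'cv2' (deep_dream_tensorflow below requires OpenCV), install the OpenCV Python package: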

sudo pip3 install opencv-python

# Successfully installed opencv-python-3.4.2.17
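Missing modules like cv2 only show up when a script crashes. A minimal pre-flight check, assuming the scripts in this article need tensorflow, cv2, numpy and PIL (adjust the list per script). It uses importlib.util.find_spec, which locates a module without importing it, so it will not catch errors raised during import such as the libf77blas.so.3 problem described later.

```python
import importlib.util

# Assumed module list for the DeepDream scripts in this article.
REQUIRED = ["tensorflow", "cv2", "numpy", "PIL"]


def missing_modules(names=REQUIRED):
    """Return the names that cannot be found on the current sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("missing:", ", ".join(missing))
    else:
        print("all modules found")
```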

● Running DeepDream in Python with TensorFlow: the hjptriplebee/deep_dream_tensorflow version

Will deep_dream_tensorflow work?

[Deep Learning] Google DeepDream: how it works and a TensorFlow implementation, 2017-09-07 16:03:44

hjptriplebee/deep_dream_tensorflow - An implement of google deep dream with tensorflow

Requirement

Python3

OpenCV

tensorflow 1.0

cd

git clone https://github.com/hjptriplebee/deep_dream_tensorflow.git

cd deep_dream_tensorflow

python3 main.py --input nature_image.jpg --output nature_image_tf.jpg

python3 main.py --input paint.jpg --output paint_tf.jpg
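The two commands above follow the same pattern: input.jpg in, input_tf.jpg out. A sketch of a wrapper that runs main.py over several images one after another, reusing only the --input and --output flags shown above. The helper names are hypothetical; runs are sequential on purpose, since a single run already triggers TensorFlow's large-allocation warnings on the Pi.

```python
import os
import subprocess
import sys


def output_name(path, suffix="_tf"):
    """nature_image.jpg -> nature_image_tf.jpg (same naming as the commands above)."""
    root, ext = os.path.splitext(path)
    return root + suffix + ext


def dream_all(inputs, script="main.py"):
    """Run deep_dream_tensorflow's main.py once per input image, sequentially."""
    for path in inputs:
        cmd = [sys.executable, script, "--input", path, "--output", output_name(path)]
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # stop at the first run that fails


if __name__ == "__main__":
    dream_all(sys.argv[1:])
```

Saved as, say, dream_all.py in the deep_dream_tensorflow directory, it would be invoked as python3 dream_all.py nature_image.jpg paint.jpg.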

Yay! TensorFlow runs on the Raspberry Pi! I got to see DeepDream nightmares with TensorFlow!

pi@raspberrypi:~/deep_dream_tensorflow $ python3 main.py --input nature_image.jpg --output nature_image_tf.jpg

octave num 1/4...

octave num 2/4...

octave num 3/4...

octave num 4/4...

2018-08-15 14:07:50.509282: W tensorflow/core/framework/allocator.cc:108] Allocation of 11197440 exceeds 10% of system memory.

2018-08-15 14:07:50.837633: W tensorflow/core/framework/allocator.cc:108] Allocation of 11360160 exceeds 10% of system memory.

2018-08-15 14:07:52.491073: W tensorflow/core/framework/allocator.cc:108] Allocation of 11197440 exceeds 10% of system memory.

2018-08-15 14:07:52.821370: W tensorflow/core/framework/allocator.cc:108] Allocation of 11360160 exceeds 10% of system memory.

2018-08-15 14:07:54.463653: W tensorflow/core/framework/allocator.cc:108] Allocation of 11197440 exceeds 10% of system memory.
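The "Allocation of N exceeds 10% of system memory" lines are W (warning) level messages; both runs still finished and wrote their output files, as the ls listing below shows. To see how large these allocations actually are, a small log-parsing sketch (the function names are hypothetical) that collects the byte counts and reports the largest in MiB:

```python
import re

ALLOC_RE = re.compile(r"Allocation of (\d+) exceeds 10% of system memory")


def allocation_sizes(log_text):
    """Return the byte counts from TensorFlow's large-allocation warnings."""
    return [int(n) for n in ALLOC_RE.findall(log_text)]


def summarize(log_text):
    """One-line summary: number of warnings and the largest allocation in MiB."""
    sizes = allocation_sizes(log_text)
    if not sizes:
        return "no large-allocation warnings"
    return "{} warnings, largest {:.2f} MiB".format(len(sizes), max(sizes) / 2**20)
```

For the nature_image.jpg run the largest request is about 10.8 MiB; for paint.jpg, later on, it is about 23.2 MiB.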

・nature_image.jpg


pi@raspberrypi:~/deep_dream_tensorflow $ python3 main.py --input paint.jpg --output paint_tf.jpg

octave num 1/4...

octave num 2/4...

octave num 3/4...

2018-08-15 14:15:09.975978: W tensorflow/core/framework/allocator.cc:108] Allocation of 24111360 exceeds 10% of system memory.

2018-08-15 14:15:10.811640: W tensorflow/core/framework/allocator.cc:108] Allocation of 24372012 exceeds 10% of system memory.

2018-08-15 14:15:15.067951: W tensorflow/core/framework/allocator.cc:108] Allocation of 24111360 exceeds 10% of system memory.

2018-08-15 14:15:15.896440: W tensorflow/core/framework/allocator.cc:108] Allocation of 24372012 exceeds 10% of system memory.

2018-08-15 14:15:20.086619: W tensorflow/core/framework/allocator.cc:108] Allocation of 24111360 exceeds 10% of system memory.

octave num 4/4...

pi@raspberrypi:~/deep_dream_tensorflow $ ls -l *.jpg

-rw-r--r-- 1 pi pi 11976 Aug 15 14:03 nature_image.jpg

-rw-r--r-- 1 pi pi 54593 Aug 15 14:09 nature_image_tf.jpg

-rw-r--r-- 1 pi pi 562577 Aug 15 14:03 paint.jpg

-rw-r--r-- 1 pi pi 286166 Aug 15 14:26 paint_tf.jpg

・paint.jpg

● Running DeepDream in Python with TensorFlow: the EdCo95/deepdream version

EdCo95/deepdream

Implementation of the DeepDream computer vision algorithm in TensorFlow, following the guide on the TensorFlow repo.

cd

mkdir tf

cd tf

git clone https://github.com/EdCo95/deepdream.git

cd deepdream

# ImportError: libf77blas.so.3: cannot open shared object file: No such file or directory

sudo apt-get -y install libatlas-base-dev

# mountain.jpg, used by simple_dreaming.py, is too large, so download flowers.jpg to use instead

wget https://github.com/google/deepdream/raw/master/flowers.jpg

# cp flowers.jpg mountain.jpg

# simple_dreaming.py

# mountain.jpg

python3 simple_dreaming.py

# -20.129911 -34.394054 21.560724 98.70986 176.24553 233.00037 290.1329 331.62225 379.3962 423.36096 472.0818 509.4819 544.9835 569.8744 608.2803 640.7252 666.15936 692.84314 715.25146 735.6415

# The result is hard to interpret, so run another sample

# deep_dreaming.py

# mountain2.jpg

python3 deep_dreaming.py

# pi@raspberrypi:~/tf/deepdream $ python3 deep_dreaming.py

# . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

# Writes out2.jpeg

# -rw-r--r-- 1 pi pi 100919 Aug 16 11:30 out2.jpeg

# mountain.jpg is too large, so copy mountain2.jpg over it

cp mountain2.jpg mountain.jpg

# Run another sample

# laplacian_pyramid_dreaming.py

# mountain.jpg

python3 laplacian_pyramid_dreaming.py

# pi@raspberrypi:~/tf/deepdream $ python3 multiscale_dreaming.py

# . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

# Writes out2.jpeg

# -rw-r--r-- 1 pi pi 74427 Aug 16 11:40 out2.jpeg
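Twice above, mountain.jpg was too large and was replaced by copying a smaller image over it. An alternative, not what was done here, is to shrink the image with Pillow (the scripts already use PIL.Image). A sketch under that assumption: the 640-pixel limit is an arbitrary choice, not a tested limit for the Pi, and the function names are hypothetical.

```python
def fit_within(size, max_side):
    """Scale (width, height) down so the longer side is at most max_side, keeping the aspect ratio."""
    width, height = size
    scale = min(1.0, float(max_side) / max(width, height))
    return max(1, int(width * scale)), max(1, int(height * scale))


def shrink_image(src, dst, max_side=640):
    """Write a downscaled copy of src to dst with Pillow (imported only when needed)."""
    from PIL import Image
    img = Image.open(src)
    new_size = fit_within(img.size, max_side)
    img.resize(new_size, Image.LANCZOS).save(dst)
    return new_size
```

For example, shrink_image("mountain.jpg", "mountain_small.jpg") keeps the original file and writes a copy whose longer side is at most 640 pixels; images already smaller than that keep their size.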

In simple_dreaming.py and multiscale_dreaming.py the code that saves the image is commented out, so add a save step to each showarray():

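# simple_dreaming.py
# Replacement showarray(); np, PIL.Image and BytesIO are assumed to be imported at the top of the script
def showarray(a, fmt="jpeg"):
    a = np.uint8(np.clip(a, 0, 1) * 255)
    f = BytesIO()  # unused now that .save(f, fmt) is commented out
    img = PIL.Image.fromarray(a) #.save(f, fmt)
    img.save("simple_dreaming.jpg")  # added: write the result to a file
    img.show()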

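# multiscale_dreaming.py
# Same change, saving to a different file name
def showarray(a, fmt="jpeg"):
    a = np.uint8(np.clip(a, 0, 1) * 255)
    f = BytesIO()  # unused now that .save(f, fmt) is commented out
    img = PIL.Image.fromarray(a) #.save(f, fmt)
    img.save("multiscale_dreaming.jpg")  # added: write the result to a file
    img.show()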
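img.show() hands the picture to an external viewer, which needs a desktop session. On a headless Pi, saving alone may be enough. A minimal save-only variant of the same conversion (clip to [0, 1], scale to 0..255, convert to uint8); the function names are hypothetical, not from the repository.

```python
import numpy as np


def to_uint8(a):
    """Map a float image in [0, 1] to 0..255 bytes, as showarray() does (values are truncated)."""
    return np.uint8(np.clip(a, 0, 1) * 255)


def save_array(a, path):
    """Save instead of display; Pillow is imported only when a file is written."""
    import PIL.Image
    PIL.Image.fromarray(to_uint8(a)).save(path)
```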

● DeepDream results with the EdCo95/deepdream version

Apart from deep_dreaming.py, I can't tell what the results mean.
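If simple_dreaming.py follows render_naive from the TensorFlow deepdream tutorial (the repository says it follows the guide on the TensorFlow repo), the 20 numbers it printed are most likely the objective score, the mean activation of the chosen layer channel, recorded at each of 20 gradient-ascent steps. A score climbing from about -20 to 735 would then mean the image is being pushed to excite that channel. The toy below is an illustration under that assumption, not the script's code: it replaces the Inception layer with a linear score mean(weights * img) so it runs with NumPy alone, and keeps the tutorial's step of dividing the gradient by its standard deviation.

```python
import numpy as np


def naive_ascent(img, weights, iter_n=20, step=1.0):
    """Toy gradient ascent: nudge img along the normalized gradient of mean(weights * img)."""
    img = np.array(img, dtype=float)  # work on a float copy
    scores = []
    for _ in range(iter_n):
        scores.append(float(np.mean(weights * img)))  # score before this step
        grad = weights / img.size                      # d(score)/d(img) for the linear score
        grad = grad / (grad.std() + 1e-8)              # normalize the step size
        img += grad * step
    return img, scores


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    _, scores = naive_ascent(np.zeros((8, 8)), rng.randn(8, 8))
    print(" ".join("{:.3f}".format(s) for s in scores))
```

With a real network the score is not linear in the image, which is one reason the article's first values dip before rising.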

Original image https://github.com/EdCo95/deepdream/blob/master/mountain2.jpg

・simple_dreaming.py (I can't tell what the result means)

・deep_dreaming.py

・laplacian_pyramid_dreaming.py (I can't tell what the result means)

・multiscale_dreaming.py (I can't tell what the result means)
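laplacian_pyramid_dreaming.py is named after the Laplacian pyramid, which the TensorFlow deepdream tutorial uses to normalize the gradient separately at each scale. The sketch below shows only the split/merge idea with a simple 2x2 average and nearest-neighbour upsampling, not the tutorial's 5x5 filter, and does no dreaming: each level keeps the detail lost by downsampling, so merging the levels rebuilds the original exactly.

```python
import numpy as np


def downsample(img):
    """Halve height and width by averaging 2x2 blocks (expects even sizes)."""
    h, w = img.shape[:2]
    return img.reshape(h // 2, 2, w // 2, 2, *img.shape[2:]).mean(axis=(1, 3))


def upsample(img):
    """Double height and width by repeating pixels."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def lap_split(img, n_levels):
    """Split img into n_levels detail bands plus a low-resolution residual."""
    bands = []
    for _ in range(n_levels):
        lo = downsample(img)
        bands.append(img - upsample(lo))  # detail that downsampling removed
        img = lo
    bands.append(img)
    return bands


def lap_merge(bands):
    """Invert lap_split by upsampling the residual and adding the detail back."""
    img = bands[-1]
    for hi in reversed(bands[:-1]):
        img = upsample(img) + hi
    return img
```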

Without libatlas-base-dev, the scripts fail with the following error.

pi@raspberrypi:~/tf/deepdream $ python3 simple_dreaming.py

Traceback (most recent call last):

File "/usr/local/lib/python3.5/dist-packages/numpy/core/__init__.py", line 16, in <module>

from . import multiarray

ImportError: libf77blas.so.3: cannot open shared object file: No such file or directory

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "simple_dreaming.py", line 5, in <module>

import numpy as np

File "/usr/local/lib/python3.5/dist-packages/numpy/__init__.py", line 142, in <module>

from . import add_newdocs

File "/usr/local/lib/python3.5/dist-packages/numpy/add_newdocs.py", line 13, in <module>

from numpy.lib import add_newdoc

File "/usr/local/lib/python3.5/dist-packages/numpy/lib/__init__.py", line 8, in <module>

from .type_check import *

File "/usr/local/lib/python3.5/dist-packages/numpy/lib/type_check.py", line 11, in <module>

import numpy.core.numeric as _nx

File "/usr/local/lib/python3.5/dist-packages/numpy/core/__init__.py", line 26, in <module>

raise ImportError(msg)

ImportError:

Importing the multiarray numpy extension module failed. Most

likely you are trying to import a failed build of numpy.

If you're working with a numpy git repo, try `git clean -xdf` (removes all

files not under version control). Otherwise reinstall numpy.

Original error was: libf77blas.so.3: cannot open shared object file: No such file or directory

Solution

sudo apt-get -y install libatlas-base-dev
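To check for the missing library before importing numpy, the dynamic linker cache can be queried with ctypes.util.find_library. A small sketch; that libf77blas comes from the ATLAS packages pulled in by libatlas-base-dev is taken from the fix above, and find_library returning None can also mean the lookup tool (ldconfig) is unavailable.

```python
import ctypes.util


def blas_runtime_present():
    """True if the dynamic linker can locate libf77blas (the library missing in the traceback above)."""
    return ctypes.util.find_library("f77blas") is not None


if __name__ == "__main__":
    if blas_runtime_present():
        print("libf77blas found")
    else:
        print("libf77blas not found: try sudo apt-get -y install libatlas-base-dev")
```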

● Running DeepDream in Python with TensorFlow: the mtyka/deepdream_highres version

The Python scripts are written for Python 2, so install the Python 2 TensorFlow binary as well.

● Tensorflow - 1.10.0 @lhelontra lhelontra released this Aug 13, 2018

#

sudo apt-get update

# Pi 3 = linux_armv7l

# Tensorflow - 1.10.0 @lhelontra lhelontra released this Aug 13, 2018

wget https://github.com/lhelontra/tensorflow-on-arm/releases/download/v1.10.0/tensorflow-1.10.0-cp27-none-linux_armv7l.whl

# install tensorflow-1.10.0-cp27-none-linux_armv7l.whl

sudo pip install tensorflow-1.10.0-cp27-none-linux_armv7l.whl

cd

mkdir tf

cd tf

git clone https://github.com/mtyka/deepdream_highres.git

cd deepdream_highres

wget https://storage.googleapis.com/download.tensorflow.org/models/inception5h.zip

unzip -n inception5h.zip

wget https://github.com/google/deepdream/raw/master/flowers.jpg

# Python 2

python deepdream.py --input flowers.jpg --output output.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 10

# It runs, but the Raspberry Pi goes down partway through

pi@raspberrypi:~ $ free -h

total used free shared buff/cache available

Mem: 976M 33M 872M 12M 70M 883M

Swap: 2.0G 0B 2.0G

pi@raspberrypi:~/tf/deepdream_highres $ python deepdream.py --input flowers.jpg --output output.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 10

--- Available Layers: ---

import/conv2d0_w Features/Channels: 64

import/conv2d0_b Features/Channels: 64

import/conv2d1_w Features/Channels: 64

import/conv2d1_b Features/Channels: 64

import/conv2d2_w Features/Channels: 192

import/conv2d2_b Features/Channels: 192

import/mixed3a_1x1_w Features/Channels: 64

import/mixed3a_1x1_b Features/Channels: 64

import/mixed3a_3x3_bottleneck_w Features/Channels: 96

import/mixed3a_3x3_bottleneck_b Features/Channels: 96

import/mixed3a_3x3_w Features/Channels: 128

import/mixed3a_3x3_b Features/Channels: 128

import/mixed3a_5x5_bottleneck_w Features/Channels: 16

import/mixed3a_5x5_bottleneck_b Features/Channels: 16

import/mixed3a_5x5_w Features/Channels: 32

import/mixed3a_5x5_b Features/Channels: 32

import/mixed3a_pool_reduce_w Features/Channels: 32

import/mixed3a_pool_reduce_b Features/Channels: 32

import/mixed3b_1x1_w Features/Channels: 128

import/mixed3b_1x1_b Features/Channels: 128

import/mixed3b_3x3_bottleneck_w Features/Channels: 128

import/mixed3b_3x3_bottleneck_b Features/Channels: 128

import/mixed3b_3x3_w Features/Channels: 192

import/mixed3b_3x3_b Features/Channels: 192

import/mixed3b_5x5_bottleneck_w Features/Channels: 32

import/mixed3b_5x5_bottleneck_b Features/Channels: 32

import/mixed3b_5x5_w Features/Channels: 96

import/mixed3b_5x5_b Features/Channels: 96

import/mixed3b_pool_reduce_w Features/Channels: 64

import/mixed3b_pool_reduce_b Features/Channels: 64

import/mixed4a_1x1_w Features/Channels: 192

import/mixed4a_1x1_b Features/Channels: 192

import/mixed4a_3x3_bottleneck_w Features/Channels: 96

import/mixed4a_3x3_bottleneck_b Features/Channels: 96

import/mixed4a_3x3_w Features/Channels: 204

import/mixed4a_3x3_b Features/Channels: 204

import/mixed4a_5x5_bottleneck_w Features/Channels: 16

import/mixed4a_5x5_bottleneck_b Features/Channels: 16

import/mixed4a_5x5_w Features/Channels: 48

import/mixed4a_5x5_b Features/Channels: 48

import/mixed4a_pool_reduce_w Features/Channels: 64

import/mixed4a_pool_reduce_b Features/Channels: 64

import/mixed4b_1x1_w Features/Channels: 160

import/mixed4b_1x1_b Features/Channels: 160

import/mixed4b_3x3_bottleneck_w Features/Channels: 112

import/mixed4b_3x3_bottleneck_b Features/Channels: 112

import/mixed4b_3x3_w Features/Channels: 224

import/mixed4b_3x3_b Features/Channels: 224

import/mixed4b_5x5_bottleneck_w Features/Channels: 24

import/mixed4b_5x5_bottleneck_b Features/Channels: 24

import/mixed4b_5x5_w Features/Channels: 64

import/mixed4b_5x5_b Features/Channels: 64

import/mixed4b_pool_reduce_w Features/Channels: 64

import/mixed4b_pool_reduce_b Features/Channels: 64

import/mixed4c_1x1_w Features/Channels: 128

import/mixed4c_1x1_b Features/Channels: 128

import/mixed4c_3x3_bottleneck_w Features/Channels: 128

import/mixed4c_3x3_bottleneck_b Features/Channels: 128

import/mixed4c_3x3_w Features/Channels: 256

import/mixed4c_3x3_b Features/Channels: 256

import/mixed4c_5x5_bottleneck_w Features/Channels: 24

import/mixed4c_5x5_bottleneck_b Features/Channels: 24

import/mixed4c_5x5_w Features/Channels: 64

import/mixed4c_5x5_b Features/Channels: 64

import/mixed4c_pool_reduce_w Features/Channels: 64

import/mixed4c_pool_reduce_b Features/Channels: 64

import/mixed4d_1x1_w Features/Channels: 112

import/mixed4d_1x1_b Features/Channels: 112

import/mixed4d_3x3_bottleneck_w Features/Channels: 144

import/mixed4d_3x3_bottleneck_b Features/Channels: 144

import/mixed4d_3x3_w Features/Channels: 288

import/mixed4d_3x3_b Features/Channels: 288

import/mixed4d_5x5_bottleneck_w Features/Channels: 32

import/mixed4d_5x5_bottleneck_b Features/Channels: 32

import/mixed4d_5x5_w Features/Channels: 64

import/mixed4d_5x5_b Features/Channels: 64

import/mixed4d_pool_reduce_w Features/Channels: 64

import/mixed4d_pool_reduce_b Features/Channels: 64

import/mixed4e_1x1_w Features/Channels: 256

import/mixed4e_1x1_b Features/Channels: 256

import/mixed4e_3x3_bottleneck_w Features/Channels: 160

import/mixed4e_3x3_bottleneck_b Features/Channels: 160

import/mixed4e_3x3_w Features/Channels: 320

import/mixed4e_3x3_b Features/Channels: 320

import/mixed4e_5x5_bottleneck_w Features/Channels: 32

import/mixed4e_5x5_bottleneck_b Features/Channels: 32

import/mixed4e_5x5_w Features/Channels: 128

import/mixed4e_5x5_b Features/Channels: 128

import/mixed4e_pool_reduce_w Features/Channels: 128

import/mixed4e_pool_reduce_b Features/Channels: 128

import/mixed5a_1x1_w Features/Channels: 256

import/mixed5a_1x1_b Features/Channels: 256

import/mixed5a_3x3_bottleneck_w Features/Channels: 160

import/mixed5a_3x3_bottleneck_b Features/Channels: 160

import/mixed5a_3x3_w Features/Channels: 320

import/mixed5a_3x3_b Features/Channels: 320

import/mixed5a_5x5_bottleneck_w Features/Channels: 48

import/mixed5a_5x5_bottleneck_b Features/Channels: 48

import/mixed5a_5x5_w Features/Channels: 128

import/mixed5a_5x5_b Features/Channels: 128

import/mixed5a_pool_reduce_w Features/Channels: 128

import/mixed5a_pool_reduce_b Features/Channels: 128

import/mixed5b_1x1_w Features/Channels: 384

import/mixed5b_1x1_b Features/Channels: 384

import/mixed5b_3x3_bottleneck_w Features/Channels: 192

import/mixed5b_3x3_bottleneck_b Features/Channels: 192

import/mixed5b_3x3_w Features/Channels: 384

import/mixed5b_3x3_b Features/Channels: 384

import/mixed5b_5x5_bottleneck_w Features/Channels: 48

import/mixed5b_5x5_bottleneck_b Features/Channels: 48

import/mixed5b_5x5_w Features/Channels: 128

import/mixed5b_5x5_b Features/Channels: 128

import/mixed5b_pool_reduce_w Features/Channels: 128

import/mixed5b_pool_reduce_b Features/Channels: 128

import/head0_bottleneck_w Features/Channels: 128

import/head0_bottleneck_b Features/Channels: 128

import/nn0_w Features/Channels: 1024

import/nn0_b Features/Channels: 1024

import/softmax0_w Features/Channels: 1008

import/softmax0_b Features/Channels: 1008

import/head1_bottleneck_w Features/Channels: 128

import/head1_bottleneck_b Features/Channels: 128

import/nn1_w Features/Channels: 1024

import/nn1_b Features/Channels: 1024

import/softmax1_w Features/Channels: 1008

import/softmax1_b Features/Channels: 1008

import/softmax2_w Features/Channels: 1008

import/softmax2_b Features/Channels: 1008

import/conv2d0_pre_relu/conv Features/Channels: 64

import/conv2d0_pre_relu Features/Channels: 64

import/conv2d0 Features/Channels: 64

import/maxpool0 Features/Channels: 64

import/localresponsenorm0 Features/Channels: 64

import/conv2d1_pre_relu/conv Features/Channels: 64

import/conv2d1_pre_relu Features/Channels: 64

import/conv2d1 Features/Channels: 64

import/conv2d2_pre_relu/conv Features/Channels: 192

import/conv2d2_pre_relu Features/Channels: 192

import/conv2d2 Features/Channels: 192

import/localresponsenorm1 Features/Channels: 192

import/maxpool1 Features/Channels: 192

import/mixed3a_1x1_pre_relu/conv Features/Channels: 64

import/mixed3a_1x1_pre_relu Features/Channels: 64

import/mixed3a_1x1 Features/Channels: 64

import/mixed3a_3x3_bottleneck_pre_relu/conv Features/Channels: 96

import/mixed3a_3x3_bottleneck_pre_relu Features/Channels: 96

import/mixed3a_3x3_bottleneck Features/Channels: 96

import/mixed3a_3x3_pre_relu/conv Features/Channels: 128

import/mixed3a_3x3_pre_relu Features/Channels: 128

import/mixed3a_3x3 Features/Channels: 128

import/mixed3a_5x5_bottleneck_pre_relu/conv Features/Channels: 16

import/mixed3a_5x5_bottleneck_pre_relu Features/Channels: 16

import/mixed3a_5x5_bottleneck Features/Channels: 16

import/mixed3a_5x5_pre_relu/conv Features/Channels: 32

import/mixed3a_5x5_pre_relu Features/Channels: 32

import/mixed3a_5x5 Features/Channels: 32

import/mixed3a_pool Features/Channels: 192

import/mixed3a_pool_reduce_pre_relu/conv Features/Channels: 32

import/mixed3a_pool_reduce_pre_relu Features/Channels: 32

import/mixed3a_pool_reduce Features/Channels: 32

import/mixed3a Features/Channels: 256

import/mixed3b_1x1_pre_relu/conv Features/Channels: 128

import/mixed3b_1x1_pre_relu Features/Channels: 128

import/mixed3b_1x1 Features/Channels: 128

import/mixed3b_3x3_bottleneck_pre_relu/conv Features/Channels: 128

import/mixed3b_3x3_bottleneck_pre_relu Features/Channels: 128

import/mixed3b_3x3_bottleneck Features/Channels: 128

import/mixed3b_3x3_pre_relu/conv Features/Channels: 192

import/mixed3b_3x3_pre_relu Features/Channels: 192

import/mixed3b_3x3 Features/Channels: 192

import/mixed3b_5x5_bottleneck_pre_relu/conv Features/Channels: 32

import/mixed3b_5x5_bottleneck_pre_relu Features/Channels: 32

import/mixed3b_5x5_bottleneck Features/Channels: 32

import/mixed3b_5x5_pre_relu/conv Features/Channels: 96

import/mixed3b_5x5_pre_relu Features/Channels: 96

import/mixed3b_5x5 Features/Channels: 96

import/mixed3b_pool Features/Channels: 256

import/mixed3b_pool_reduce_pre_relu/conv Features/Channels: 64

import/mixed3b_pool_reduce_pre_relu Features/Channels: 64

import/mixed3b_pool_reduce Features/Channels: 64

import/mixed3b Features/Channels: 480

import/maxpool4 Features/Channels: 480

import/mixed4a_1x1_pre_relu/conv Features/Channels: 192

import/mixed4a_1x1_pre_relu Features/Channels: 192

import/mixed4a_1x1 Features/Channels: 192

import/mixed4a_3x3_bottleneck_pre_relu/conv Features/Channels: 96

import/mixed4a_3x3_bottleneck_pre_relu Features/Channels: 96

import/mixed4a_3x3_bottleneck Features/Channels: 96

import/mixed4a_3x3_pre_relu/conv Features/Channels: 204

import/mixed4a_3x3_pre_relu Features/Channels: 204

import/mixed4a_3x3 Features/Channels: 204

import/mixed4a_5x5_bottleneck_pre_relu/conv Features/Channels: 16

import/mixed4a_5x5_bottleneck_pre_relu Features/Channels: 16

import/mixed4a_5x5_bottleneck Features/Channels: 16

import/mixed4a_5x5_pre_relu/conv Features/Channels: 48

import/mixed4a_5x5_pre_relu Features/Channels: 48

import/mixed4a_5x5 Features/Channels: 48

import/mixed4a_pool Features/Channels: 480

import/mixed4a_pool_reduce_pre_relu/conv Features/Channels: 64

import/mixed4a_pool_reduce_pre_relu Features/Channels: 64

import/mixed4a_pool_reduce Features/Channels: 64

import/mixed4a Features/Channels: 508

import/mixed4b_1x1_pre_relu/conv Features/Channels: 160

import/mixed4b_1x1_pre_relu Features/Channels: 160

import/mixed4b_1x1 Features/Channels: 160

import/mixed4b_3x3_bottleneck_pre_relu/conv Features/Channels: 112

import/mixed4b_3x3_bottleneck_pre_relu Features/Channels: 112

import/mixed4b_3x3_bottleneck Features/Channels: 112

import/mixed4b_3x3_pre_relu/conv Features/Channels: 224

import/mixed4b_3x3_pre_relu Features/Channels: 224

import/mixed4b_3x3 Features/Channels: 224

import/mixed4b_5x5_bottleneck_pre_relu/conv Features/Channels: 24

import/mixed4b_5x5_bottleneck_pre_relu Features/Channels: 24

import/mixed4b_5x5_bottleneck Features/Channels: 24

import/mixed4b_5x5_pre_relu/conv Features/Channels: 64

import/mixed4b_5x5_pre_relu Features/Channels: 64

import/mixed4b_5x5 Features/Channels: 64

import/mixed4b_pool Features/Channels: 508

import/mixed4b_pool_reduce_pre_relu/conv Features/Channels: 64

import/mixed4b_pool_reduce_pre_relu Features/Channels: 64

import/mixed4b_pool_reduce Features/Channels: 64

import/mixed4b Features/Channels: 512

import/mixed4c_1x1_pre_relu/conv Features/Channels: 128

import/mixed4c_1x1_pre_relu Features/Channels: 128

import/mixed4c_1x1 Features/Channels: 128

import/mixed4c_3x3_bottleneck_pre_relu/conv Features/Channels: 128

import/mixed4c_3x3_bottleneck_pre_relu Features/Channels: 128

import/mixed4c_3x3_bottleneck Features/Channels: 128

import/mixed4c_3x3_pre_relu/conv Features/Channels: 256

import/mixed4c_3x3_pre_relu Features/Channels: 256

import/mixed4c_3x3 Features/Channels: 256

import/mixed4c_5x5_bottleneck_pre_relu/conv Features/Channels: 24

import/mixed4c_5x5_bottleneck_pre_relu Features/Channels: 24

import/mixed4c_5x5_bottleneck Features/Channels: 24

import/mixed4c_5x5_pre_relu/conv Features/Channels: 64

import/mixed4c_5x5_pre_relu Features/Channels: 64

import/mixed4c_5x5 Features/Channels: 64

import/mixed4c_pool Features/Channels: 512

import/mixed4c_pool_reduce_pre_relu/conv Features/Channels: 64

import/mixed4c_pool_reduce_pre_relu Features/Channels: 64

import/mixed4c_pool_reduce Features/Channels: 64

import/mixed4c Features/Channels: 512

import/mixed4d_1x1_pre_relu/conv Features/Channels: 112

import/mixed4d_1x1_pre_relu Features/Channels: 112

import/mixed4d_1x1 Features/Channels: 112

import/mixed4d_3x3_bottleneck_pre_relu/conv Features/Channels: 144

import/mixed4d_3x3_bottleneck_pre_relu Features/Channels: 144

import/mixed4d_3x3_bottleneck Features/Channels: 144

import/mixed4d_3x3_pre_relu/conv Features/Channels: 288

import/mixed4d_3x3_pre_relu Features/Channels: 288

import/mixed4d_3x3 Features/Channels: 288

import/mixed4d_5x5_bottleneck_pre_relu/conv Features/Channels: 32

import/mixed4d_5x5_bottleneck_pre_relu Features/Channels: 32

import/mixed4d_5x5_bottleneck Features/Channels: 32

import/mixed4d_5x5_pre_relu/conv Features/Channels: 64

import/mixed4d_5x5_pre_relu Features/Channels: 64

import/mixed4d_5x5 Features/Channels: 64

import/mixed4d_pool Features/Channels: 512

import/mixed4d_pool_reduce_pre_relu/conv Features/Channels: 64

import/mixed4d_pool_reduce_pre_relu Features/Channels: 64

import/mixed4d_pool_reduce Features/Channels: 64

import/mixed4d Features/Channels: 528

import/mixed4e_1x1_pre_relu/conv Features/Channels: 256

import/mixed4e_1x1_pre_relu Features/Channels: 256

import/mixed4e_1x1 Features/Channels: 256

import/mixed4e_3x3_bottleneck_pre_relu/conv Features/Channels: 160

import/mixed4e_3x3_bottleneck_pre_relu Features/Channels: 160

import/mixed4e_3x3_bottleneck Features/Channels: 160

import/mixed4e_3x3_pre_relu/conv Features/Channels: 320

import/mixed4e_3x3_pre_relu Features/Channels: 320

import/mixed4e_3x3 Features/Channels: 320

import/mixed4e_5x5_bottleneck_pre_relu/conv Features/Channels: 32

import/mixed4e_5x5_bottleneck_pre_relu Features/Channels: 32

import/mixed4e_5x5_bottleneck Features/Channels: 32

import/mixed4e_5x5_pre_relu/conv Features/Channels: 128

import/mixed4e_5x5_pre_relu Features/Channels: 128

import/mixed4e_5x5 Features/Channels: 128

import/mixed4e_pool Features/Channels: 528

import/mixed4e_pool_reduce_pre_relu/conv Features/Channels: 128

import/mixed4e_pool_reduce_pre_relu Features/Channels: 128

import/mixed4e_pool_reduce Features/Channels: 128

import/mixed4e Features/Channels: 832

import/maxpool10 Features/Channels: 832

import/mixed5a_1x1_pre_relu/conv Features/Channels: 256

import/mixed5a_1x1_pre_relu Features/Channels: 256

import/mixed5a_1x1 Features/Channels: 256

import/mixed5a_3x3_bottleneck_pre_relu/conv Features/Channels: 160

import/mixed5a_3x3_bottleneck_pre_relu Features/Channels: 160

import/mixed5a_3x3_bottleneck Features/Channels: 160

import/mixed5a_3x3_pre_relu/conv Features/Channels: 320

import/mixed5a_3x3_pre_relu Features/Channels: 320

import/mixed5a_3x3 Features/Channels: 320

import/mixed5a_5x5_bottleneck_pre_relu/conv Features/Channels: 48

import/mixed5a_5x5_bottleneck_pre_relu Features/Channels: 48

import/mixed5a_5x5_bottleneck Features/Channels: 48

import/mixed5a_5x5_pre_relu/conv Features/Channels: 128

import/mixed5a_5x5_pre_relu Features/Channels: 128

import/mixed5a_5x5 Features/Channels: 128

import/mixed5a_pool Features/Channels: 832

import/mixed5a_pool_reduce_pre_relu/conv Features/Channels: 128

import/mixed5a_pool_reduce_pre_relu Features/Channels: 128

import/mixed5a_pool_reduce Features/Channels: 128

import/mixed5a Features/Channels: 832

import/mixed5b_1x1_pre_relu/conv Features/Channels: 384

import/mixed5b_1x1_pre_relu Features/Channels: 384

import/mixed5b_1x1 Features/Channels: 384

import/mixed5b_3x3_bottleneck_pre_relu/conv Features/Channels: 192

import/mixed5b_3x3_bottleneck_pre_relu Features/Channels: 192

import/mixed5b_3x3_bottleneck Features/Channels: 192

import/mixed5b_3x3_pre_relu/conv Features/Channels: 384

import/mixed5b_3x3_pre_relu Features/Channels: 384

import/mixed5b_3x3 Features/Channels: 384

import/mixed5b_5x5_bottleneck_pre_relu/conv Features/Channels: 48

import/mixed5b_5x5_bottleneck_pre_relu Features/Channels: 48

import/mixed5b_5x5_bottleneck Features/Channels: 48

import/mixed5b_5x5_pre_relu/conv Features/Channels: 128

import/mixed5b_5x5_pre_relu Features/Channels: 128

import/mixed5b_5x5 Features/Channels: 128

import/mixed5b_pool Features/Channels: 832

import/mixed5b_pool_reduce_pre_relu/conv Features/Channels: 128

import/mixed5b_pool_reduce_pre_relu Features/Channels: 128

import/mixed5b_pool_reduce Features/Channels: 128

import/mixed5b Features/Channels: 1024

import/avgpool0 Features/Channels: 1024

import/head0_pool Features/Channels: 508

import/head0_bottleneck_pre_relu/conv Features/Channels: 128

import/head0_bottleneck_pre_relu Features/Channels: 128

import/head0_bottleneck Features/Channels: 128

import/head0_bottleneck/reshape/shape Features/Channels: 2

import/head0_bottleneck/reshape Features/Channels: 2048

import/nn0_pre_relu/matmul Features/Channels: 1024

import/nn0_pre_relu Features/Channels: 1024

import/nn0 Features/Channels: 1024

import/nn0/reshape/shape Features/Channels: 2

import/nn0/reshape Features/Channels: 1024

import/softmax0_pre_activation/matmul Features/Channels: 1008

import/softmax0_pre_activation Features/Channels: 1008

import/softmax0 Features/Channels: 1008

import/head1_pool Features/Channels: 528

import/head1_bottleneck_pre_relu/conv Features/Channels: 128

import/head1_bottleneck_pre_relu Features/Channels: 128

import/head1_bottleneck Features/Channels: 128

import/head1_bottleneck/reshape/shape Features/Channels: 2

import/head1_bottleneck/reshape Features/Channels: 2048

import/nn1_pre_relu/matmul Features/Channels: 1024

import/nn1_pre_relu Features/Channels: 1024

import/nn1 Features/Channels: 1024

import/nn1/reshape/shape Features/Channels: 2

import/nn1/reshape Features/Channels: 1024

import/softmax1_pre_activation/matmul Features/Channels: 1008

import/softmax1_pre_activation Features/Channels: 1008

import/softmax1 Features/Channels: 1008

import/avgpool0/reshape/shape Features/Channels: 2

import/avgpool0/reshape Features/Channels: 1024

import/softmax2_pre_activation/matmul Features/Channels: 1008

import/softmax2_pre_activation Features/Channels: 1008

import/softmax2 Features/Channels: 1008

import/output Features/Channels: 1008

import/output1 Features/Channels: 1008

import/output2 Features/Channels: 1008

Number of layers 360

Total number of feature channels: 87322

Chosen layer:

name: "import/mixed5a_5x5_pre_relu"

op: "BiasAdd"

input: "import/mixed5a_5x5_pre_relu/conv"

input: "import/mixed5a_5x5_b"

device: "/device:CPU:0"

attr {

key: "T"

value {

type: DT_FLOAT

}

}

attr {

key: "data_format"

value {

s: "NHWC"

}

}

Cycle 0 Res: (240, 320, 3)

Octave: 0 Res: (10, 14, 3)

Octave: 1 Res: (10, 14, 3)

Octave: 2 Res: (15, 20, 3)

Octave: 3 Res: (22, 29, 3)

Octave: 4 Res: (31, 41, 3)

Octave: 5 Res: (44, 58, 3)

Octave: 6 Res: (62, 82, 3)

Octave: 7 Res: (87, 115, 3)

Octave: 8 Res: (122, 162, 3)

Octave: 9 Res: (171, 228, 3)

Saving 0

Cycle 1 Res: (240, 320, 3)

Octave: 0 Res: (10, 14, 3)

Octave: 1 Res: (10, 14, 3)

Octave: 2 Res: (15, 20, 3)

Octave: 3 Res: (22, 29, 3)

Octave: 4 Res: (31, 41, 3)

Octave: 5 Res: (44, 58, 3)

Octave: 6 Res: (62, 82, 3)

Octave: 7 Res: (87, 115, 3)

Octave: 8 Res: (122, 162, 3)

The Raspberry Pi goes down at this point (I ran it twice as a test, and it went down both times).

Original image https://github.com/google/deepdream/raw/master/flowers.jpg
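The Octave resolutions in the log can be reproduced under an assumption: that deepdream.py builds its pyramid like the TensorFlow tutorial's render_deepdream, dividing the size by octave_scale = 1.4 and truncating to integers. The chain computed below matches the logged values, and the log appears to print each octave's size before upscaling it, which would explain why (10, 14) is printed twice and the full (240, 320) size never appears as an octave. This is an inference from the numbers, not from reading the script.

```python
def octave_sizes(shape, octave_n, octave_scale=1.4):
    """Repeatedly shrink (height, width) by octave_scale, truncating to integers."""
    sizes = [tuple(shape)]
    for _ in range(octave_n - 1):
        h, w = sizes[-1]
        sizes.append((int(h / octave_scale), int(w / octave_scale)))
    return sizes  # largest first, smallest last


if __name__ == "__main__":
    # --octaves 10 on the 240x320 flowers.jpg, smallest octave first
    for i, size in enumerate(reversed(octave_sizes((240, 320), 10))):
        print("octave", i, "processed at", size)
```

Note that --octaves only adds smaller levels; the last octave always works at the full input resolution.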

python deepdream.py --input flowers.jpg --output output.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 10

・output.jpg_00000.jpg

python deepdream.py --input flowers.jpg --output output_m3b.jpg --layer import/mixed3b_5x5_pre_relu --frames 7 --octaves 5 --iterations 5

This one ran all the way through (7 images generated).

・output_m3b.jpg_00000.jpg

・output_m3b.jpg_00001.jpg

・output_m3b.jpg_00002.jpg

・output_m3b.jpg_00003.jpg

・output_m3b.jpg_00004.jpg

・output_m3b.jpg_00005.jpg

・output_m3b.jpg_00006.jpg

python deepdream.py --input flowers.jpg --output output_m4c.jpg --layer import/mixed4c_5x5_pre_relu --frames 7 --octaves 5 --iterations 5

This one ran all the way through (7 images generated).

・output_m4c.jpg_00000.jpg

・output_m4c.jpg_00001.jpg

・output_m4c.jpg_00002.jpg

・output_m4c.jpg_00003.jpg

・output_m4c.jpg_00004.jpg

・output_m4c.jpg_00005.jpg

・output_m4c.jpg_00006.jpg

python deepdream.py --input flowers.jpg --output output_m5a.jpg --layer import/mixed5a_5x5_pre_relu --frames 7 --octaves 10 --iterations 5

This one ran all the way through (7 images generated).

・output_m5a.jpg_00000.jpg

・output_m5a.jpg_00001.jpg

・output_m5a.jpg_00002.jpg

・output_m5a.jpg_00003.jpg

・output_m5a.jpg_00004.jpg

・output_m5a.jpg_00005.jpg

・output_m5a.jpg_00006.jpg
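The three completed runs above differ mainly in the --layer value, taken from the "Available Layers" listing that deepdream.py prints. A sketch that parses that listing, keeps the *_pre_relu layers (the kind passed to --layer here) and builds a command line with only the flags used in this article. The defaults octaves=5 and iterations=5 come from the runs that finished; the output file naming is a hypothetical choice.

```python
import re

LAYER_RE = re.compile(r"^(import/\S+) Features/Channels: (\d+)$")


def parse_layers(listing):
    """Turn 'import/<name> Features/Channels: N' lines into a {name: N} dict."""
    layers = {}
    for line in listing.splitlines():
        m = LAYER_RE.match(line.strip())
        if m:
            layers[m.group(1)] = int(m.group(2))
    return layers


def pre_relu_layers(layers):
    """Keep the *_pre_relu layers, dropping their /conv children and other ops."""
    return {name: n for name, n in layers.items() if name.endswith("_pre_relu")}


def dream_command(layer, src="flowers.jpg", frames=7, octaves=5, iterations=5):
    """Build a deepdream.py argument list from the flags used in this article."""
    tag = layer.split("/")[-1]
    return ["python", "deepdream.py", "--input", src, "--output", "output_" + tag + ".jpg",
            "--layer", layer, "--frames", str(frames), "--octaves", str(octaves),
            "--iterations", str(iterations)]
```

Saving the listing to a file and passing its text to parse_layers gives every candidate layer with its channel count; each resulting list can be run with subprocess.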

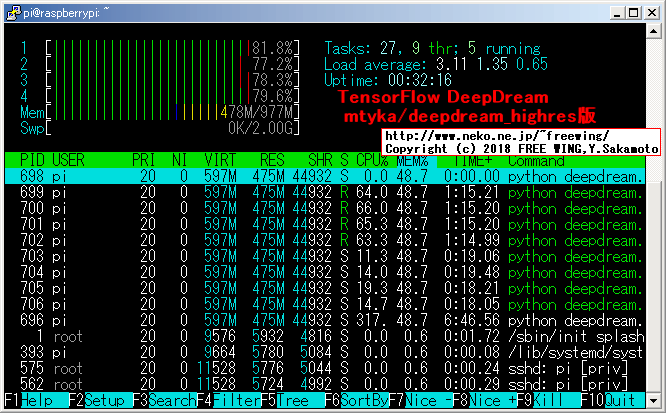

・Memory usage when running the Python DeepDream with TensorFlow (mtyka/deepdream_highres version)



Feel free to link (no need to contact me; however, pages other than the top page may move when the site structure changes)

Copyright (c)

2018 FREE WING,Y.Sakamoto

Powered by 猫屋敷工房 & HTML Generator

http://www.neko.ne.jp/~freewing/raspberry_pi/raspberry_pi_install_tensorflow_deep_learning_framework/
