・2018/08/27

[Success] Applying the NNPACK processing from digitalbrain79's Darknet with NNPACK to the latest Darknet

(Run the latest NNPACK-enabled Darknet on a Raspberry Pi for ultra-fast object detection and DeepDream nightmares)

Tags: [Raspberry Pi], [Electronics], [Deep Learning]

● How to build the latest Darknet with NNPACK on a Raspberry Pi

Darknet with NNPACK

digitalbrain79's Darknet with NNPACK has not been updated since the 2017/11 version.

The upstream pjreddie Darknet that it is based on is still being updated.

So I applied digitalbrain79's NNPACK processing to the latest pjreddie Darknet, to run the latest Darknet with NNPACK on a Raspberry Pi and have some fun with it.

Likewise, I applied digitalbrain79's bias patch to the latest Maratyszcza NNPACK.

I also hand-optimized a few small details.

● Raspberry Pi Raspbian OS version used for this build

RASPBIAN STRETCH WITH DESKTOP

Version:June 2018

Release date: 2018-06-27

Kernel version: 4.14

pi@raspberrypi:~/pytorch $ uname -a

Linux raspberrypi 4.14.50-v7+ #1122 SMP Tue Jun 19 12:26:26 BST 2018 armv7l GNU/Linux

● Applied the NNPACK processing from digitalbrain79's Darknet with NNPACK to the latest Darknet

Darknet with NNPACK

digitalbrain79/NNPACK-darknet

digitalbrain79/darknet-nnpack

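The upstream projects that the digitalbrain79 versions are based on:

・Maratyszcza/NNPACK

・pjreddie/darknet

I created branches that apply the digitalbrain79 Darknet with NNPACK patches to the latest versions of these projects: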

FREEWING-JP/NNPACK_for_Darknet

FREEWING-JP/darknet_nnpack_for_raspberry_pi3

※ The default branch has been changed from master.

# Bring the package lists up to date with the usual sudo apt-get update

sudo apt-get update

# Initial Setup

sudo apt-get -y install ninja-build

sudo pip install --upgrade git+https://github.com/Maratyszcza/PeachPy

sudo pip install --upgrade git+https://github.com/Maratyszcza/confu

# Build NNPACK-darknet

cd

mkdir fw

cd fw

# git clone https://github.com/digitalbrain79/NNPACK-darknet.git

# cd NNPACK-darknet

# Patch digitalbrain79 Add bias when bias is not NULL

git clone https://github.com/FREEWING-JP/NNPACK_for_Darknet.git

cd NNPACK_for_Darknet

#

confu setup

python ./configure.py --backend auto

# About 10 minutes when building with 2 jobs (-j2)

ninja -j2

# real 9m51.718s

# user 13m19.744s

# sys 0m26.458s

#

sudo cp -a lib/* /usr/lib/


sudo cp include/nnpack.h /usr/include/

sudo cp deps/pthreadpool/include/pthreadpool.h /usr/include/

# Build darknet-nnpack

# https://github.com/digitalbrain79/darknet-nnpack.git

cd

cd fw

# git clone https://github.com/digitalbrain79/darknet-nnpack.git

# Patch digitalbrain79 Darknet with NNPACK diff

git clone https://github.com/FREEWING-JP/darknet_nnpack_for_raspberry_pi3.git

cd darknet_nnpack_for_raspberry_pi3

# About 1 min 12 s when building with 2 jobs (-j2)

make -j2

# real 1m12.131s

# user 1m34.961s

# sys 0m3.702s

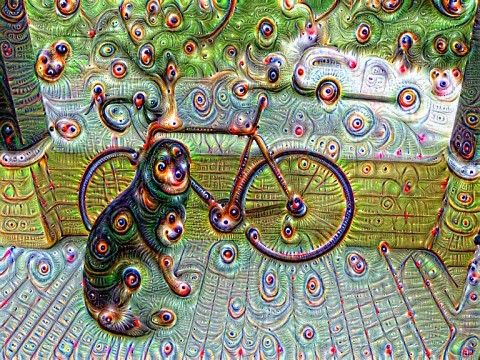

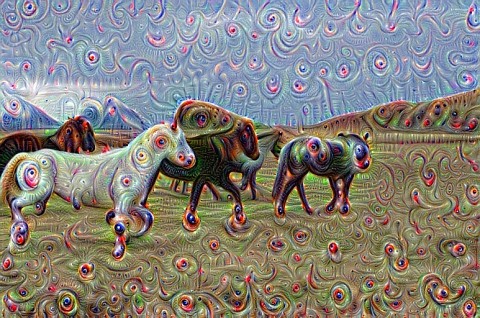

# Nightmare

# jnet-conv.weights (72MB)

wget http://pjreddie.com/media/files/jnet-conv.weights

# wget https://raw.githubusercontent.com/pjreddie/darknet/master/cfg/jnet-conv.cfg

# mv jnet-conv.cfg ./cfg/

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/horses.jpg 11 -rounds 4 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/eagle.jpg 11 -rounds 2 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 13 -rounds 1 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 12 -rounds 1 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 11 -rounds 1 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 10 -rounds 1 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 9 -rounds 1 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 8 -rounds 1 -range 3

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/dog.jpg 7 -rounds 1 -range 3

# vgg-conv.weights (56M)

wget http://pjreddie.com/media/files/vgg-conv.weights

./darknet nightmare cfg/vgg-conv.cfg vgg-conv.weights data/scream.jpg 10

# Object Detection

# yolov2.weights (194MB)

wget https://pjreddie.com/media/files/yolov2.weights

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

# yolov2-tiny-voc.weights (61MB)

wget https://pjreddie.com/media/files/yolov2-tiny-voc.weights

./darknet detector test cfg/voc.data cfg/yolov2-tiny-voc.cfg yolov2-tiny-voc.weights data/dog.jpg

# https://pjreddie.com/darknet/yolo/

# YOLOv3-320 COCO trainval yolov3.cfg yolov3.weights (237M)

wget https://pjreddie.com/media/files/yolov3.weights

./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

# 26 Segmentation fault

./darknet detector test cfg/coco.data cfg/yolov3.cfg yolov3.weights data/dog.jpg

# 39 Segmentation fault

# https://github.com/pjreddie/darknet/issues/823

# Is this problem about big endian or little endian for model saving?

# YOLOv3-tiny COCO trainval yolov3-tiny.cfg yolov3-tiny.weights (34M)

wget https://pjreddie.com/media/files/yolov3-tiny.weights

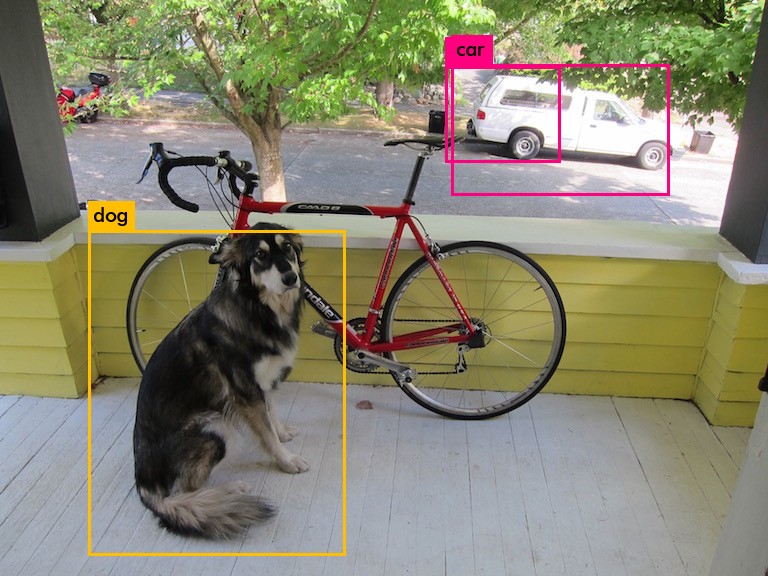

./darknet detect cfg/yolov3-tiny.cfg yolov3-tiny.weights data/dog.jpg

data/dog.jpg: Predicted in 0.982928 seconds.

dog: 57%

car: 52%

truck: 56%

car: 62%

bicycle: 59%

pi@raspberrypi:~/fw/darknet $ ./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

layer filters size input output

0 conv 32 3 x 3 / 1 608 x 608 x 3 -> 608 x 608 x 32 0.639 BFLOPs

1 max 2 x 2 / 2 608 x 608 x 32 -> 304 x 304 x 32

2 conv 64 3 x 3 / 1 304 x 304 x 32 -> 304 x 304 x 64 3.407 BFLOPs

3 max 2 x 2 / 2 304 x 304 x 64 -> 152 x 152 x 64

4 conv 128 3 x 3 / 1 152 x 152 x 64 -> 152 x 152 x 128 3.407 BFLOPs

5 conv 64 1 x 1 / 1 152 x 152 x 128 -> 152 x 152 x 64 0.379 BFLOPs

6 conv 128 3 x 3 / 1 152 x 152 x 64 -> 152 x 152 x 128 3.407 BFLOPs

7 max 2 x 2 / 2 152 x 152 x 128 -> 76 x 76 x 128

8 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs

9 conv 128 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BFLOPs

10 conv 256 3 x 3 / 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BFLOPs

11 max 2 x 2 / 2 76 x 76 x 256 -> 38 x 38 x 256

12 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs

13 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs

14 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs

15 conv 256 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BFLOPs

16 conv 512 3 x 3 / 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BFLOPs

17 max 2 x 2 / 2 38 x 38 x 512 -> 19 x 19 x 512

18 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BFLOPs

19 conv 512 1 x 1 / 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BFLOPs

20 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BFLOPs

21 conv 512 1 x 1 / 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BFLOPs

22 conv 1024 3 x 3 / 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BFLOPs

23 conv 1024 3 x 3 / 1 19 x 19 x1024 -> 19 x 19 x1024 6.814 BFLOPs

24 conv 1024 3 x 3 / 1 19 x 19 x1024 -> 19 x 19 x1024 6.814 BFLOPs

25 route 16

26 conv 64 1 x 1 / 1 38 x 38 x 512 -> 38 x 38 x 64 0.095 BFLOPs

27 reorg / 2 38 x 38 x 64 -> 19 x 19 x 256

28 route 27 24

29 conv 1024 3 x 3 / 1 19 x 19 x1280 -> 19 x 19 x1024 8.517 BFLOPs

30 conv 425 1 x 1 / 1 19 x 19 x1024 -> 19 x 19 x 425 0.314 BFLOPs

31 detection

mask_scale: Using default '1.000000'

Loading weights from yolov2.weights...Done!

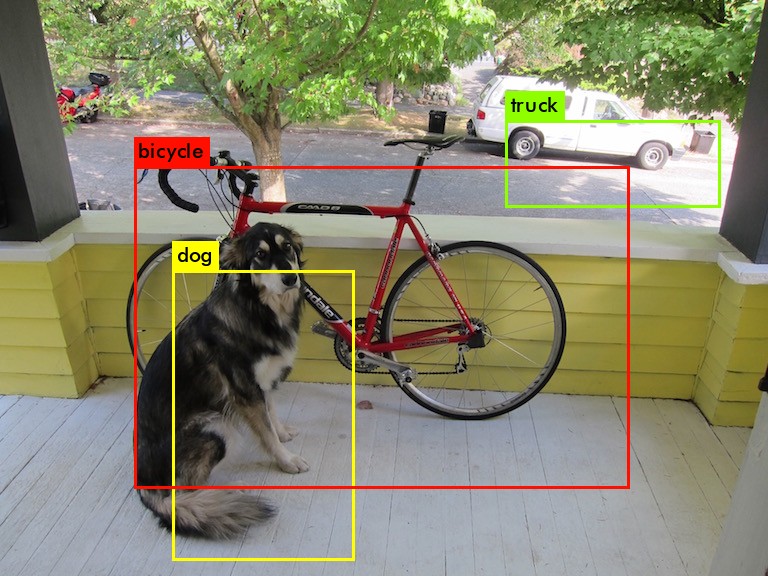

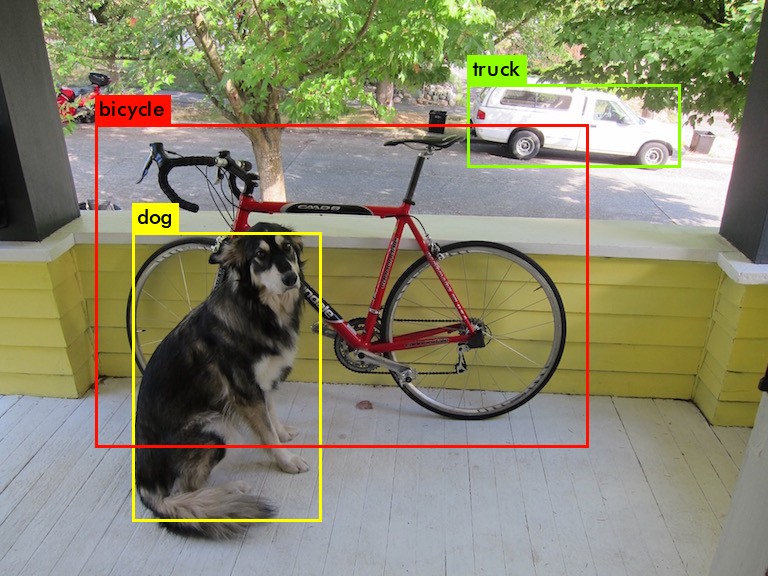

data/dog.jpg: Predicted in 9.017948 seconds.

dog: 83%

truck: 79%

bicycle: 84%

・./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

pi@raspberrypi:~/fw/darknet $ ./darknet detector test cfg/voc.data cfg/yolov2-tiny-voc.cfg yolov2-tiny-voc.weights data/dog.jpg

layer filters size input output

0 conv 16 3 x 3 / 1 416 x 416 x 3 -> 416 x 416 x 16 0.150 BFLOPs

1 max 2 x 2 / 2 416 x 416 x 16 -> 208 x 208 x 16

2 conv 32 3 x 3 / 1 208 x 208 x 16 -> 208 x 208 x 32 0.399 BFLOPs

3 max 2 x 2 / 2 208 x 208 x 32 -> 104 x 104 x 32

4 conv 64 3 x 3 / 1 104 x 104 x 32 -> 104 x 104 x 64 0.399 BFLOPs

5 max 2 x 2 / 2 104 x 104 x 64 -> 52 x 52 x 64

6 conv 128 3 x 3 / 1 52 x 52 x 64 -> 52 x 52 x 128 0.399 BFLOPs

7 max 2 x 2 / 2 52 x 52 x 128 -> 26 x 26 x 128

8 conv 256 3 x 3 / 1 26 x 26 x 128 -> 26 x 26 x 256 0.399 BFLOPs

9 max 2 x 2 / 2 26 x 26 x 256 -> 13 x 13 x 256

10 conv 512 3 x 3 / 1 13 x 13 x 256 -> 13 x 13 x 512 0.399 BFLOPs

11 max 2 x 2 / 1 13 x 13 x 512 -> 13 x 13 x 512

12 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

13 conv 1024 3 x 3 / 1 13 x 13 x1024 -> 13 x 13 x1024 3.190 BFLOPs

14 conv 125 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 125 0.043 BFLOPs

15 detection

mask_scale: Using default '1.000000'

Loading weights from yolov2-tiny-voc.weights...Done!

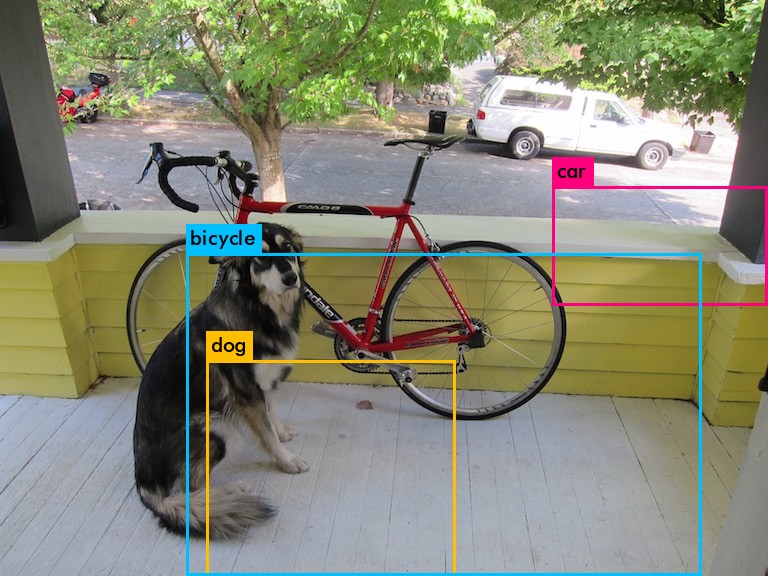

data/dog.jpg: Predicted in 1.328894 seconds.

dog: 83%

car: 56%

bicycle: 59%

・./darknet detector test cfg/voc.data cfg/yolov2-tiny-voc.cfg yolov2-tiny-voc.weights data/dog.jpg

After fixing maxpool_layer.c (the detection bounding boxes are now drawn in the correct position)

・./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

・./darknet detector test cfg/voc.data cfg/yolov2-tiny-voc.cfg yolov2-tiny-voc.weights data/dog.jpg

● Darknet with NNPACK (digitalbrain79) benchmark on a Raspberry Pi 3 Model B

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

| optimize | gemm.c | OpenMP | ARM NEON | NNPACK | Time (s, best of 3 runs) |
|---|---|---|---|---|---|
| No optimize | - | - | - | - | 445 |
| gemm only | * | - | - | - | 382 |
| OpenMP only | - | * | - | - | 141 |
| gemm + OpenMP | * | * | - | - | 128 |
| + ARM NEON | * | * | * | - | 83 |
| + NNPACK | * | * | * | * | 12 |
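To put the table in perspective, the speedup of each configuration over the unoptimized build can be computed directly from the listed times. The following is only a small sketch of that arithmetic; the function names `speedup` and `print_speedups` are hypothetical and not part of Darknet.

```c
#include <stdio.h>

/* Speedup of an optimized run over a baseline run; both times in seconds. */
double speedup(double baseline_sec, double optimized_sec)
{
    return baseline_sec / optimized_sec;
}

/* Print the speedup of every configuration in the table over the
   unoptimized build (445 s). The times are copied from the table. */
void print_speedups(void)
{
    const char *names[] = { "gemm only", "OpenMP only", "gemm + OpenMP",
                            "+ ARM NEON", "+ NNPACK" };
    const double secs[] = { 382.0, 141.0, 128.0, 83.0, 12.0 };
    const double baseline = 445.0;
    size_t i;

    for (i = 0; i < sizeof(secs) / sizeof(secs[0]); i++) {
        printf("%-14s %6.1fx\n", names[i], speedup(baseline, secs[i]));
    }
}
```

With these figures the full NNPACK build comes out roughly 37 times faster than the unoptimized one (445 / 12).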

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Makefile

GPU=0

CUDNN=0

OPENCV=0

OPENMP=0

DEBUG=0

NNPACK=0

ARM_NEON=0

STBI_NEON=0

No optimize

data/dog.jpg: Predicted in 445.699451 seconds.

data/dog.jpg: Predicted in 452.022224 seconds.

data/dog.jpg: Predicted in 450.958672 seconds.

dog: 82%

truck: 64%

bicycle: 85%

● gemm.c optimized

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Makefile

GPU=0

CUDNN=0

OPENCV=0

OPENMP=0

DEBUG=0

NNPACK=0

ARM_NEON=0

STBI_NEON=0

gemm.c optimized

data/dog.jpg: Predicted in 418.481606 seconds.

data/dog.jpg: Predicted in 382.948527 seconds.

data/dog.jpg: Predicted in 400.786624 seconds.

dog: 82%

truck: 64%

bicycle: 85%

● OpenMP

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Makefile

GPU=0

CUDNN=0

OPENCV=0

OPENMP=1

DEBUG=0

NNPACK=0

ARM_NEON=0

STBI_NEON=0

data/dog.jpg: Predicted in 141.379768 seconds.

data/dog.jpg: Predicted in 144.121333 seconds.

data/dog.jpg: Predicted in 142.067783 seconds.

dog: 82%

truck: 64%

bicycle: 85%

● OpenMP + gemm.c optimized

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Makefile

GPU=0

CUDNN=0

OPENCV=0

OPENMP=1

DEBUG=0

NNPACK=0

ARM_NEON=0

STBI_NEON=0

OpenMP + gemm.c optimized

data/dog.jpg: Predicted in 134.488275 seconds.

data/dog.jpg: Predicted in 128.075909 seconds.

data/dog.jpg: Predicted in 129.822826 seconds.

dog: 82%

truck: 64%

bicycle: 85%

● ARM NEON + OpenMP + gemm.c optimized

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Makefile

GPU=0

CUDNN=0

OPENCV=0

OPENMP=1

DEBUG=0

NNPACK=0

ARM_NEON=1

STBI_NEON=1

ARM NEON + OpenMP + gemm.c optimized

data/dog.jpg: Predicted in 85.205355 seconds.

data/dog.jpg: Predicted in 83.948154 seconds.

data/dog.jpg: Predicted in 84.931311 seconds.

dog: 82%

truck: 64%

bicycle: 85%

● NNPACK + ARM NEON + OpenMP + gemm.c optimized

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Makefile

GPU=0

CUDNN=0

OPENCV=0

OPENMP=1

DEBUG=0

NNPACK=1

ARM_NEON=1

STBI_NEON=1

NNPACK + ARM NEON + OpenMP + gemm.c optimized

data/dog.jpg: Predicted in 12.674640 seconds.

data/dog.jpg: Predicted in 13.312412 seconds.

data/dog.jpg: Predicted in 12.714760 seconds.

dog: 82%

truck: 64%

bicycle: 85%

● Raspberry Pi 3B Darknet with NNPACK benchmark 2

nightmare scream

time ./darknet nightmare cfg/vgg-conv.cfg vgg-conv.weights data/scream.jpg 10

| optimize | gemm.c | OpenMP | ARM NEON | NNPACK | Time (min) |
|---|---|---|---|---|---|
| No optimize | - | - | - | - | ??? |
| gemm only | * | - | - | - | ??? |
| gemm + NNPACK | * | - | - | * | 87 |
| + OpenMP | * | * | - | * | 28 |
| + ARM NEON | * | * | * | * | 22 |

OPENMP=0

NNPACK=1

ARM_NEON=0

STBI_NEON=0

real 87m13.582s

user 90m45.465s

sys 0m7.923s

OPENMP=1

NNPACK=1

ARM_NEON=0

STBI_NEON=0

real 28m32.102s

user 103m11.944s

sys 0m9.695s

OPENMP=1

NNPACK=1

ARM_NEON=1

STBI_NEON=1

real 22m18.132s

user 78m47.156s

sys 0m11.322s

real 22m21.919s

user 78m21.531s

sys 0m10.831s
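The real/user/sys figures above also show how well each build keeps the four cores busy: CPU time (user + sys) divided by wall-clock time (real) gives the average number of busy cores. A minimal sketch of that calculation, with hypothetical helper names:

```c
/* Convert a value printed by time as "XmY.YYYs" into seconds. */
double to_seconds(int minutes, double seconds)
{
    return minutes * 60.0 + seconds;
}

/* Average number of busy cores: CPU time (user + sys) divided by
   wall-clock time (real). All arguments are in seconds. */
double busy_cores(double real_sec, double user_sec, double sys_sec)
{
    return (user_sec + sys_sec) / real_sec;
}
```

For example, the OPENMP=1 / NNPACK=1 / ARM_NEON=0 run (real 28m32.102s, user 103m11.944s, sys 0m9.695s) works out to about 3.6 busy cores, while the OPENMP=0 run stays close to 1.0.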

The cpu-info results for the Raspberry Pi 3B+ are shown below.

Pi3B = Broadcom BCM2837, Cortex-A53 v8-A, Quad-Core(32bit mode)

# CPU INFOrmation library

# https://github.com/pytorch/cpuinfo

pi@raspberrypi:~/cpuinfo $ uname -a

Linux raspberrypi 4.14.50-v7+ #1122 SMP Tue Jun 19 12:26:26 BST 2018 armv7l GNU/Linux

pi@raspberrypi:~/cpuinfo $ bin/cpu-info

Packages:

0: Unknown

Cores:

0: 1 processor (0), ARM Cortex-A53

1: 1 processor (1), ARM Cortex-A53

2: 1 processor (2), ARM Cortex-A53

3: 1 processor (3), ARM Cortex-A53

Logical processors:

0

1

2

3

pi@raspberrypi:~/cpuinfo $ bin/isa-info

Instruction sets:

Thumb: yes

Thumb 2: yes

ARMv5E: yes

ARMv6: yes

ARMv6-K: yes

ARMv7: yes

ARMv7 MP: yes

IDIV: yes

Floating-Point support:

VFPv2: no

VFPv3: yes

VFPv3+D32: yes

VFPv3+FP16: yes

VFPv3+FP16+D32: yes

VFPv4: yes

VFPv4+D32: yes

VJCVT: no

SIMD extensions:

WMMX: no

WMMX 2: no

NEON: yes

NEON-FP16: yes

NEON-FMA: yes

NEON VQRDMLAH/VQRDMLSH: no

NEON FP16 arithmetics: no

NEON complex: no

Cryptography extensions:

AES: no

SHA1: no

SHA2: no

PMULL: no

CRC32: yes

pi@raspberrypi:~/cpuinfo $ bin/cache-info

L1 instruction cache: 4 x 16 KB, 2-way set associative (128 sets), 64 byte lines, shared by 1 processors

L1 data cache: 4 x 16 KB, 4-way set associative (64 sets), 64 byte lines, shared by 1 processors

L2 data cache: 256 KB (exclusive), 16-way set associative (256 sets), 64 byte lines, shared by 4 processors

● Darknet with NNPACK (digitalbrain79) benchmark on an Orange Pi PC 2 (ARM 64-bit)

Orange Pi PC2 = Allwinner H5 sun50i, Cortex-A53 v8-A, Quad-Core

user@orangepipc2:~/cpuinfo$ uname -a

Linux orangepipc2 4.14.15-sunxi64 #30 SMP Tue Jan 30 17:40:12 CET 2018 aarch64 GNU/Linux

wget https://pjreddie.com/media/files/yolov2.weights

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Loading weights from yolov2.weights...Done!

data/dog.jpg: Predicted in 9.788583 seconds.

dog: 82%

truck: 64%

bicycle: 85%

# Nightmare

wget http://pjreddie.com/media/files/vgg-conv.weights

./darknet nightmare cfg/vgg-conv.cfg vgg-conv.weights data/scream.jpg 10

real 15m39.909s

user 58m5.198s

sys 0m5.351s

real 15m50.974s

user 58m40.321s

sys 0m5.267s

wget http://pjreddie.com/media/files/jnet-conv.weights

./darknet nightmare cfg/jnet-conv.cfg jnet-conv.weights data/horses.jpg 11 -rounds 4 -range 3

16 conv 1024 3 x 3 / 1 1 x 1 x1024 -> 1 x 1 x1024 0.019 BFLOPs

17 max 2 x 2 / 2 1 x 1 x1024 -> 1 x 1 x1024

Loading weights from jnet-conv.weights...Done!

Iteration: 0,

net: 773 512 1187328 im: 773 512 3

Killed

wget https://pjreddie.com/media/files/yolov2.weights

./darknet detector test cfg/coco.data cfg/yolov2.cfg yolov2.weights data/dog.jpg

Loading weights from yolov2.weights...Done!

data/dog.jpg: Predicted in 9.667782 seconds.

dog: 82%

truck: 64%

bicycle: 85%

wget https://pjreddie.com/media/files/yolov2-tiny-voc.weights

./darknet detector test cfg/voc.data cfg/yolov2-tiny-voc.cfg yolov2-tiny-voc.weights data/dog.jpg

Loading weights from yolov2-tiny-voc.weights...Done!

data/dog.jpg: Predicted in 1.497692 seconds.

dog: 78%

car: 55%

car: 50%

wget https://pjreddie.com/media/files/yolov3.weights

./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

105 conv 255 1 x 1 / 1 76 x 76 x 256 -> 76 x 76 x 255 0.754 BFLOPs

106 yolo

Loading weights from yolov3.weights...Done!

Killed

wget https://pjreddie.com/media/files/yolov3-tiny.weights

./darknet detect cfg/yolov3-tiny.cfg yolov3-tiny.weights data/dog.jpg

22 conv 255 1 x 1 / 1 26 x 26 x 256 -> 26 x 26 x 255 0.088 BFLOPs

23 yolo

Loading weights from yolov3-tiny.weights...Done!

data/dog.jpg: Predicted in 1.309047 seconds.

dog: 57%

car: 52%

truck: 56%

car: 62%

bicycle: 59%

# CPU INFOrmation library

# https://github.com/pytorch/cpuinfo

cd

git clone https://github.com/pytorch/cpuinfo.git

cd cpuinfo

confu setup

python configure.py

ninja

bin/cpu-info

bin/isa-info

bin/cache-info

user@orangepipc2:~/cpuinfo$ bin/cpu-info

Packages:

0: Unknown

Cores:

0: 1 processor (0), ARM Cortex-A53

1: 1 processor (1), ARM Cortex-A53

2: 1 processor (2), ARM Cortex-A53

3: 1 processor (3), ARM Cortex-A53

Logical processors:

0

1

2

3

user@orangepipc2:~/cpuinfo$ bin/isa-info

Instruction sets:

ARM v8.1 atomics: no

ARM v8.1 SQRDMLxH: no

ARM v8.2 FP16 arithmetics: no

ARM v8.3 JS conversion: no

ARM v8.3 complex: no

Cryptography extensions:

AES: yes

SHA1: yes

SHA2: yes

PMULL: yes

CRC32: yes

user@orangepipc2:~/cpuinfo$ bin/cache-info

L1 instruction cache: 4 x 16 KB, 2-way set associative (128 sets), 64 byte lines, shared by 1 processors

L1 data cache: 4 x 16 KB, 4-way set associative (64 sets), 64 byte lines, shared by 1 processors

L2 data cache: 256 KB (exclusive), 16-way set associative (256 sets), 64 byte lines, shared by 4 processors

● Darknet demo

# Real-Time Detection on a Webcam

./darknet detector demo cfg/coco.data cfg/yolov3.cfg yolov3.weights

# Demo needs OpenCV for webcam images.

# Install OpenCV lib.

sudo apt-get -y install libopencv-dev

# Setting up libopencv-dev (2.4.9.1+dfsg1-2) ...

sudo apt-get -y install libcv-dev libcvaux-dev libhighgui-dev

# 0 upgraded, 0 newly installed, 0 to remove and 68 not upgraded.

# nano Makefile

# OPENCV=1

make -j2

./darknet detector demo cfg/coco.data cfg/yolov3.cfg yolov3.weights

# 35 Segmentation fault

# YOLOv2

./darknet detector demo cfg/coco.data cfg/yolov2.cfg yolov2.weights

# (Demo:2620): Gtk-WARNING **: cannot open display:

# Start X Window System

sudo systemctl start lightdm

detector.c

int width = find_int_arg(argc, argv, "-w", 0);

int height = find_int_arg(argc, argv, "-h", 0);

int fps = find_int_arg(argc, argv, "-fps", 0);

//int class = find_int_arg(argc, argv, "-class", 0);

width = 640;

height = 480;

fps = 1;

demo.c

cvMoveWindow("Demo", 0, 0);

if (!w || !h) {

cvResizeWindow("Demo", 640, 480);

} else {

cvResizeWindow("Demo", w, h);

}

demo.c

# NNPACK patch

...

srand(2222222);

#ifdef NNPACK

nnp_initialize();

net->threadpool = pthreadpool_create(4);

#endif

int i;

...

pthread_join(detect_thread, 0);

++count;

}

#ifdef NNPACK

pthreadpool_destroy(net->threadpool);

nnp_deinitialize();

#endif

}

● Changes made to Darknet with NNPACK

FREEWING-JP/darknet_nnpack_for_raspberry_pi3 feature/darknet-nnpack

・ Patch digitalbrain79 Darknet with NNPACK diff

Applied digitalbrain79's NNPACK processing to the latest pjreddie Darknet.

・ fix detection rectangle

Fixed the drawing position of the detection bounding boxes (corrected the reference coordinates).

・ nightmare with NNPACK

Applied NNPACK to nightmare.

・ fix Makefile for Newest Maratyszcza/NNPACK

The latest NNPACK library requires the cpuinfo library.

・ mod Makefile optimize for Raspberry Pi3

Changed the Makefile build options for the Raspberry Pi 3.

・ mod batchnorm_layer.c

The NNPACK processing in batchnorm_layer.c is now also applied when net.train is set.

● NNPACK library

FREEWING-JP/NNPACK feature/bias_darknet

・ Patch digitalbrain79 Add bias when bias is not NULL

Add bias when bias is not NULL

● Diff of the digitalbrain79 version of Darknet with NNPACK

Darknet with NNPACK

The diff for the NNPACK and ARM_NEON parts.

digitalbrain79/NNPACK-darknet

digitalbrain79/darknet-nnpack

examples\detector.c

void test_detector(...)

{

...

float nms=.3;

#ifdef NNPACK

nnp_initialize();

net->threadpool = pthreadpool_create(4);

#endif

...

#ifdef NNPACK

image im = load_image_thread(input, 0, 0, net->c, net->threadpool);

image sized = letterbox_image_thread(im, net->w, net->h, net->threadpool);

#else

image im = load_image_color(input,0,0);

image sized = letterbox_image(im, net->w, net->h);

#endif

...

#ifdef NNPACK

pthreadpool_destroy(net->threadpool);

nnp_deinitialize();

#endif

}

include\darknet.h

#ifdef NNPACK

#include <nnpack.h>

#endif

typedef struct network{

...

#ifdef NNPACK

pthreadpool_t threadpool;

#endif

} network;

...

image load_image(char *filename, int w, int h, int c);

#ifdef NNPACK

image load_image_thread(char *filename, int w, int h, int c, pthreadpool_t threadpool);

#endif

...

image letterbox_image(image im, int w, int h);

#ifdef NNPACK

image letterbox_image_thread(image im, int w, int h, pthreadpool_t threadpool);

#endif

src\activations.c

#ifdef NNPACK

struct activate_params {

float *x;

int n;

ACTIVATION a;

};

void activate_array_compute(struct activate_params *params, size_t c)

{

int i;

for (i = 0; i < params->n; i++) {

params->x[c*params->n + i] = activate(params->x[c*params->n + i], params->a);

}

}

void activate_array_thread(float *x, const int c, const int n, const ACTIVATION a, pthreadpool_t threadpool)

{

struct activate_params params = { x, n, a };

pthreadpool_compute_1d(threadpool, (pthreadpool_function_1d_t)activate_array_compute, &params, c);

}

#endif
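The listing above splits the activation work by channel: the callback receives a channel index c and processes the n values starting at x[c*n]. The sketch below reproduces that splitting without the pthreadpool dependency, so it can be compiled anywhere. `run_1d_sequential` is a hypothetical sequential stand-in for the thread pool call, and the leaky activation is hard-coded as an assumption for illustration.

```c
#include <stddef.h>

/* Callback type: one unit of work per index, similar in spirit to a 1D pool task. */
typedef void (*task_1d_fn)(void *ctx, size_t index);

/* Hypothetical sequential stand-in for a thread pool: calls fn once for
   every index in [0, range). A real pool would run these calls in parallel. */
void run_1d_sequential(task_1d_fn fn, void *ctx, size_t range)
{
    size_t i;
    for (i = 0; i < range; i++) {
        fn(ctx, i);
    }
}

struct leaky_params {
    float *x;  /* channels * n values, stored channel by channel */
    int n;     /* number of values per channel */
};

/* Apply a leaky ReLU (slope 0.1 for negative inputs) to channel c only. */
void leaky_channel(void *ctx, size_t c)
{
    struct leaky_params *p = (struct leaky_params *)ctx;
    int i;
    for (i = 0; i < p->n; i++) {
        float v = p->x[c * p->n + i];
        p->x[c * p->n + i] = (v > 0) ? v : 0.1f * v;
    }
}

/* Activate the whole array, one task per channel. */
void leaky_array_by_channel(float *x, int channels, int n)
{
    struct leaky_params params = { x, n };
    run_1d_sequential(leaky_channel, &params, (size_t)channels);
}
```

Because each task touches only its own channel's slice, the tasks are independent of each other, which is what makes the per-channel split safe to run on several threads.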

src\activations.h

#ifdef NNPACK

void activate_array_thread(float *x, const int c, const int n, const ACTIVATION a, pthreadpool_t threadpool);

#endif

src\batchnorm_layer.c

#ifdef NNPACK

struct normalize_params {

float *x;

float *mean;

float *variance;

int spatial;

};

void normalize_cpu_thread(struct normalize_params *params, size_t batch, size_t filters)

{

int i;

float div = sqrt(params->variance[filters]) + .000001f;

for(i = 0; i < params->spatial; i++){

int index = batch*filters*params->spatial + filters*params->spatial + i;

params->x[index] = (params->x[index] - params->mean[filters])/div;

}

}

#endif

...

#ifdef NNPACK

struct normalize_params params = { l.output, l.rolling_mean, l.rolling_variance, l.out_h*l.out_w };

pthreadpool_compute_2d(net.threadpool, (pthreadpool_function_2d_t)normalize_cpu_thread,

&params, l.batch, l.out_c);

#else

normalize_cpu(l.output, l.rolling_mean, l.rolling_variance, l.batch, l.out_c, l.out_h*l.out_w);

#endif

src\convolutional_layer.c

#ifdef NNPACK

l.forward = forward_convolutional_layer_nnpack;

#else

l.forward = forward_convolutional_layer;

#endif

...

#ifdef NNPACK

void forward_convolutional_layer_nnpack(convolutional_layer l, network net)

{

struct nnp_size input_size = { l.w, l.h };

struct nnp_padding input_padding = { l.pad, l.pad, l.pad, l.pad };

struct nnp_size kernel_size = { l.size, l.size };

struct nnp_size stride = { l.stride, l.stride };

nnp_convolution_inference(

nnp_convolution_algorithm_implicit_gemm,

nnp_convolution_transform_strategy_tuple_based,

l.c,

l.n,

input_size,

input_padding,

kernel_size,

stride,

net.input,

l.weights,

NULL,

l.output,

NULL,

NULL,

nnp_activation_identity,

NULL,

net.threadpool,

NULL

);

int out_h = convolutional_out_height(l);

int out_w = convolutional_out_width(l);

int n = out_h*out_w;

if(l.batch_normalize){

forward_batchnorm_layer(l, net);

} else {

add_bias(l.output, l.biases, l.batch, l.n, out_h*out_w);

}

activate_array_thread(l.output, l.n, n, l.activation, net.threadpool);

if(l.binary || l.xnor) swap_binary(&l);

}

#endif

void forward_convolutional_layer(convolutional_layer l, network net)

{

int i, j;

fill_cpu(l.outputs*l.batch, 0, l.output, 1);

if(l.xnor){

binarize_weights(l.weights, l.n, l.c/l.groups*l.size*l.size, l.binary_weights);

swap_binary(&l);

binarize_cpu(net.input, l.c*l.h*l.w*l.batch, l.binary_input);

net.input = l.binary_input;

}

int m = l.n/l.groups;

int k = l.size*l.size*l.c/l.groups;

int n = l.out_w*l.out_h;

for(i = 0; i < l.batch; ++i){

for(j = 0; j < l.groups; ++j){

float *a = l.weights + j*l.nweights/l.groups;

float *b = net.workspace;

float *c = l.output + (i*l.groups + j)*n*m;

im2col_cpu(net.input + (i*l.groups + j)*l.c/l.groups*l.h*l.w,

l.c/l.groups, l.h, l.w, l.size, l.stride, l.pad, b);

gemm(0,0,m,n,k,1,a,k,b,n,1,c,n);

}

}

if(l.batch_normalize){

forward_batchnorm_layer(l, net);

} else {

add_bias(l.output, l.biases, l.batch, l.n, l.out_h*l.out_w);

}

activate_array(l.output, l.outputs*l.batch, l.activation);

if(l.binary || l.xnor) swap_binary(&l);

}

src\convolutional_layer.h

#ifdef NNPACK

void forward_convolutional_layer_nnpack(const convolutional_layer layer, network net);

#endif

void forward_convolutional_layer(const convolutional_layer layer, network net);

src\image.c

#ifdef NNPACK

struct resize_image_params {

image im;

image resized;

image part;

int w;

int h;

};

void resize_image_compute_w(struct resize_image_params *params, size_t k, size_t r)

{

int c;

float w_scale = (float)(params->im.w - 1) / (params->w - 1);

for(c = 0; c < params->w; ++c){

float val = 0;

if(c == params->w-1 || params->im.w == 1){

val = get_pixel(params->im, params->im.w-1, r, k);

} else {

float sx = c*w_scale;

int ix = (int) sx;

float dx = sx - ix;

val = (1 - dx) * get_pixel(params->im, ix, r, k) + dx * get_pixel(params->im, ix+1, r, k);

}

set_pixel(params->part, c, r, k, val);

}

}

void resize_image_compute_h(struct resize_image_params *params, size_t k, size_t r)

{

int c;

float h_scale = (float)(params->im.h - 1) / (params->h - 1);

float sy = r*h_scale;

int iy = (int) sy;

float dy = sy - iy;

for(c = 0; c < params->w; ++c){

float val = (1-dy) * get_pixel(params->part, c, iy, k);

set_pixel(params->resized, c, r, k, val);

}

if(r == params->h-1 || params->im.h == 1) return;

for(c = 0; c < params->w; ++c){

float val = dy * get_pixel(params->part, c, iy+1, k);

add_pixel(params->resized, c, r, k, val);

}

}

image resize_image_thread(image im, int w, int h, pthreadpool_t threadpool)

{

image resized = make_image(w, h, im.c);

image part = make_image(w, im.h, im.c);

struct resize_image_params params = { im, resized, part, w, h };

pthreadpool_compute_2d(threadpool, (pthreadpool_function_2d_t)resize_image_compute_w,

&params, im.c, im.h);

pthreadpool_compute_2d(threadpool, (pthreadpool_function_2d_t)resize_image_compute_h,

&params, im.c, h);

free_image(part);

return resized;

}

image letterbox_image_thread(image im, int w, int h, pthreadpool_t threadpool)

{

int new_w = im.w;

int new_h = im.h;

if (((float)w/im.w) < ((float)h/im.h)) {

new_w = w;

new_h = (im.h * w)/im.w;

} else {

new_h = h;

new_w = (im.w * h)/im.h;

}

image resized = resize_image_thread(im, new_w, new_h, threadpool);

image boxed = make_image(w, h, im.c);

fill_image(boxed, .5);

//int i;

//for(i = 0; i < boxed.w*boxed.h*boxed.c; ++i) boxed.data[i] = 0;

embed_image(resized, boxed, (w-new_w)/2, (h-new_h)/2);

free_image(resized);

return boxed;

}

#endif

...

image resize_image(image im, int w, int h)

{

image resized = make_image(w, h, im.c);

image part = make_image(w, im.h, im.c);

int r, c, k;

float w_scale = (float)(im.w - 1) / (w - 1);

float h_scale = (float)(im.h - 1) / (h - 1);

for(k = 0; k < im.c; ++k){

for(r = 0; r < im.h; ++r){

for(c = 0; c < w; ++c){

float val = 0;

if(c == w-1 || im.w == 1){

val = get_pixel(im, im.w-1, r, k);

} else {

float sx = c*w_scale;

int ix = (int) sx;

float dx = sx - ix;

val = (1 - dx) * get_pixel(im, ix, r, k) + dx * get_pixel(im, ix+1, r, k);

}

set_pixel(part, c, r, k, val);

}

}

}

for(k = 0; k < im.c; ++k){

for(r = 0; r < h; ++r){

float sy = r*h_scale;

int iy = (int) sy;

float dy = sy - iy;

for(c = 0; c < w; ++c){

float val = (1-dy) * get_pixel(part, c, iy, k);

set_pixel(resized, c, r, k, val);

}

if(r == h-1 || im.h == 1) continue;

for(c = 0; c < w; ++c){

float val = dy * get_pixel(part, c, iy+1, k);

add_pixel(resized, c, r, k, val);

}

}

}

free_image(part);

return resized;

}
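The size computation inside letterbox_image_thread is easy to check in isolation. The sketch below copies only that arithmetic into a small helper; the `letterbox_geom` struct and the `letterbox_geometry` name are hypothetical and exist only for this illustration.

```c
/* Result of the letterbox size computation (hypothetical helper type). */
typedef struct {
    int new_w;  /* width of the resized image  */
    int new_h;  /* height of the resized image */
    int off_x;  /* left offset inside the w x h box */
    int off_y;  /* top offset inside the w x h box  */
} letterbox_geom;

/* Scale an im_w x im_h image to fit inside a w x h box while keeping its
   aspect ratio, then center it. Same arithmetic as letterbox_image_thread. */
letterbox_geom letterbox_geometry(int im_w, int im_h, int w, int h)
{
    letterbox_geom g;
    if (((float)w / im_w) < ((float)h / im_h)) {
        /* Width is the limiting side. */
        g.new_w = w;
        g.new_h = (im_h * w) / im_w;
    } else {
        /* Height is the limiting side. */
        g.new_h = h;
        g.new_w = (im_w * h) / im_h;
    }
    g.off_x = (w - g.new_w) / 2;
    g.off_y = (h - g.new_h) / 2;
    return g;
}
```

As an example, assuming a 768 x 576 input and a 416 x 416 network, the image is resized to 416 x 312 and placed 52 pixels from the top, with the remaining bands filled with 0.5 gray by fill_image.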

src\maxpool_layer.c

#ifdef NNPACK

struct maxpool_params {

const maxpool_layer *l;

network *net;

};

void maxpool_thread(struct maxpool_params *params, size_t b, size_t k)

{

int i, j, m, n;

int w_offset = -params->l->pad;

int h_offset = -params->l->pad;

int h = params->l->out_h;

int w = params->l->out_w;

int c = params->l->c;

for(i = 0; i < h; ++i){

for(j = 0; j < w; ++j){

int out_index = j + w*(i + h*(k + c*b));

float max = -FLT_MAX;

int max_i = -1;

for(n = 0; n < params->l->size; ++n){

for(m = 0; m < params->l->size; ++m){

int cur_h = h_offset + i*params->l->stride + n;

int cur_w = w_offset + j*params->l->stride + m;

int index = cur_w + params->l->w*(cur_h + params->l->h*(k + b*params->l->c));

int valid = (cur_h >= 0 && cur_h < params->l->h &&

cur_w >= 0 && cur_w < params->l->w);

float val = (valid != 0) ? params->net->input[index] : -FLT_MAX;

max_i = (val > max) ? index : max_i;

max = (val > max) ? val : max;

}

}

params->l->output[out_index] = max;

params->l->indexes[out_index] = max_i;

}

}

}

#endif

void forward_maxpool_layer(const maxpool_layer l, network net)

{

#ifdef NNPACK

struct maxpool_params params = { &l, &net };

pthreadpool_compute_2d(net.threadpool, (pthreadpool_function_2d_t)maxpool_thread,

&params, l.batch, l.c);

#else

int b,i,j,k,m,n;

int w_offset = -l.pad;

int h_offset = -l.pad;

int h = l.out_h;

int w = l.out_w;

int c = l.c;

for(b = 0; b < l.batch; ++b){

for(k = 0; k < c; ++k){

for(i = 0; i < h; ++i){

for(j = 0; j < w; ++j){

int out_index = j + w*(i + h*(k + c*b));

float max = -FLT_MAX;

int max_i = -1;

for(n = 0; n < l.size; ++n){

for(m = 0; m < l.size; ++m){

int cur_h = h_offset + i*l.stride + n;

int cur_w = w_offset + j*l.stride + m;

int index = cur_w + l.w*(cur_h + l.h*(k + b*l.c));

int valid = (cur_h >= 0 && cur_h < l.h &&

cur_w >= 0 && cur_w < l.w);

float val = (valid != 0) ? net.input[index] : -FLT_MAX;

max_i = (val > max) ? index : max_i;

max = (val > max) ? val : max;

}

}

l.output[out_index] = max;

l.indexes[out_index] = max_i;

}

}

}

}

#endif

}
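Both versions of the max-pool loop rely on the same two ideas: a flat index into a batch x channel x height x width array, and treating positions outside the input as -FLT_MAX so they never win the comparison. A minimal single-channel sketch of both, with hypothetical function names:

```c
#include <float.h>

/* Flat offset of element (b, k, i, j) in an array laid out as
   batch x channels x height x width, as used for out_index above. */
int nchw_index(int b, int k, int i, int j, int c, int h, int w)
{
    return j + w * (i + h * (k + c * b));
}

/* Maximum over one size x size window of a single h x w channel.
   (top, left) is the window origin and may lie partly outside the input;
   positions outside the input are skipped, which has the same effect as
   treating them as -FLT_MAX. */
float window_max(const float *in, int h, int w, int top, int left, int size)
{
    float max = -FLT_MAX;
    int n, m;
    for (n = 0; n < size; ++n) {
        for (m = 0; m < size; ++m) {
            int cur_h = top + n;
            int cur_w = left + m;
            int valid = (cur_h >= 0 && cur_h < h && cur_w >= 0 && cur_w < w);
            if (valid) {
                float val = in[cur_w + w * cur_h];
                if (val > max) max = val;
            }
        }
    }
    return max;
}
```

In the NNPACK build, each (batch, channel) pair becomes one task, so the two outer loops of the plain version are what pthreadpool_compute_2d iterates over.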

src\blas.c

#ifdef ARM_NEON

#include <arm_neon.h>

#endif

...

void scal_cpu(int N, float ALPHA, float *X, int INCX)

{

int i;

#ifdef ARM_NEON

float32x4_t alpha = vdupq_n_f32(ALPHA);

for (i = 0; i < N; i+=4) {

vst1q_f32(X + i*INCX, vmulq_f32(vld1q_f32(X + i*INCX), alpha));

}

#else

for(i = 0; i < N; ++i) X[i*INCX] *= ALPHA;

#endif

}
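Note that the NEON branch above advances four floats per iteration and loads them contiguously, so as written it is only safe when N is a multiple of 4 and INCX is 1. The following sketch shows the same 4-wide structure with a scalar tail for the leftover elements. It uses plain C instead of NEON intrinsics so that it compiles on any machine, and `scal_blocked` is a hypothetical name, not a Darknet function.

```c
/* Multiply n contiguous floats by alpha, four at a time, then handle
   the remaining 0 to 3 elements one by one so nothing past x[n-1] is touched. */
void scal_blocked(int n, float alpha, float *x)
{
    int i = 0;

    /* Main loop: full blocks of four (the part a NEON version would vectorize). */
    for (; i + 4 <= n; i += 4) {
        x[i]     *= alpha;
        x[i + 1] *= alpha;
        x[i + 2] *= alpha;
        x[i + 3] *= alpha;
    }

    /* Tail loop: leftover elements when n is not a multiple of 4. */
    for (; i < n; ++i) {
        x[i] *= alpha;
    }
}
```

In an actual NEON version, the body of the main loop would be the vld1q_f32 / vmulq_f32 / vst1q_f32 sequence from the listing, with the tail loop left scalar.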

src\stb_image.h

#ifdef STBI_NEON

#include <arm_neon.h>

#define STBI_SIMD_ALIGN(type, name) type name __attribute__((aligned(16)))

#endif

...

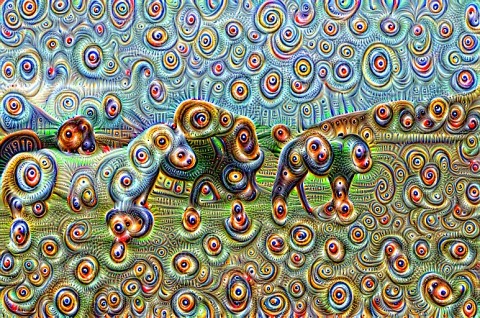

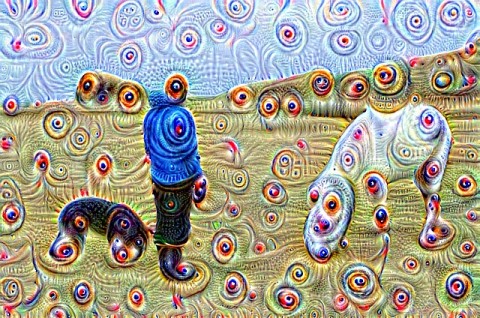

・ nightmare with NNPACK

・dog_jnet-conv_11_000000.jpg

・dog_jnet-conv_11_000001.jpg

・dog_jnet-conv_11_000003.jpg

・eagle_jnet-conv_11_000003.jpg

・horses_jnet-conv_11_000000.jpg

・horses_jnet-conv_11_000001.jpg

・horses_jnet-conv_11_000002.jpg

・horses_jnet-conv_11_000003.jpg

・person_jnet-conv_11_000000.jpg

・person_jnet-conv_11_000001.jpg

・person_jnet-conv_1_000000.jpg

・person_jnet-conv_7_000000.jpg

・person_jnet-conv_7_000001.jpg

・person_jnet-conv_8_000000.jpg

・scream_vgg-conv_10_000000.jpg

● CPUs of the main Raspberry Pi models

| Model | SoC | CPU core | Architecture | Family | Cores |
|---|---|---|---|---|---|
| Raspberry Pi Zero | BCM2835 | ARM1176JZF-S | ARMv6 | ARM11 | 1 |
| Raspberry Pi 2 | BCM2836 | ARM Cortex-A7 | ARMv7 | Cortex-A | 4 |
| Raspberry Pi 2 (V1.2) | BCM2837 | ARM Cortex-A53 | ARMv8 | Cortex-A | 4 |
| Raspberry Pi 3 | BCM2837 | ARM Cortex-A53 | ARMv8 | Cortex-A | 4 |

※ On the Raspberry Pi 2 Model B V1.2 (made by Element14), the CPU (SoC) was changed from the BCM2836 to the BCM2837.

● gcc optimization settings

3.10 Options That Control Optimization - Using the GNU Compiler Collection (GCC)

-O3

Optimize yet more. -O3 turns on all optimizations specified by -O2 and also turns on the -finline-functions, -funswitch-loops, -fpredictive-commoning, -fgcse-after-reload, -ftree-loop-vectorize, -ftree-loop-distribute-patterns, -fsplit-paths -ftree-slp-vectorize, -fvect-cost-model, -ftree-partial-pre and -fipa-cp-clone options.

-Ofast

Disregard strict standards compliance. -Ofast enables all -O3 optimizations. It also enables optimizations that are not valid for all standard-compliant programs. It turns on -ffast-math and the Fortran-specific -fno-protect-parens and -fstack-arrays.

-ffast-math

Sets the options -fno-math-errno, -funsafe-math-optimizations, -ffinite-math-only, -fno-rounding-math, -fno-signaling-nans and -fcx-limited-range.

This option causes the preprocessor macro __FAST_MATH__ to be defined.
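Because `-ffast-math` defines `__FAST_MATH__`, code can detect at compile time whether it is being built that way. The sketch below is only an illustration, with hypothetical helper names. It also includes a NaN test that works on the raw IEEE 754 bits, on the assumption that a plain `isnan()` check may not be dependable once `-ffinite-math-only` lets the compiler assume NaNs never occur.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: 1 when built with -ffast-math (or -Ofast). */
int fast_math_enabled(void)
{
#ifdef __FAST_MATH__
    return 1;
#else
    return 0;
#endif
}

/* Hypothetical helper: reinterprets a 32-bit pattern as a float. */
float float_from_bits(uint32_t bits)
{
    float v;
    memcpy(&v, &bits, sizeof v);
    return v;
}

/* Hypothetical helper: NaN test on the binary32 bit pattern
 * (exponent all ones, mantissa non-zero), using integer ops only. */
int is_nan_bits(float v)
{
    uint32_t u;
    memcpy(&u, &v, sizeof u);
    return (u & 0x7f800000u) == 0x7f800000u && (u & 0x007fffffu) != 0;
}
```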

ARM Cortex-A53

NEON advanced SIMD

DSP & SIMD extensions

VFPv4 floating point

Hardware virtualization support

● 3.18.4 ARM Options

3.18.4 ARM Options

-march=name[+extension…]

This specifies the name of the target ARM architecture. GCC uses this name to determine what kind of instructions it can emit when generating assembly code. This option can be used in conjunction with or instead of the -mcpu= option.

-march=armv7-a

-march=armv7ve

# The extended version of the ARMv7-A architecture with support for virtualization.

-march=armv7ve+neon-vfpv4

gcc: error: unrecognized argument in option ‘-march=armv7ve+neon-vfpv4’

-mtune=name

This option specifies the name of the target ARM processor for which GCC should tune the performance of the code.

-mtune=cortex-a53

-mcpu=name[+extension…]

This specifies the name of the target ARM processor. GCC uses this name to derive the name of the target ARM architecture (as if specified by -march) and the ARM processor type for which to tune for performance (as if specified by -mtune). Where this option is used in conjunction with -march or -mtune, those options take precedence over the appropriate part of this option.

-mfpu=name

This specifies what floating-point hardware (or hardware emulation) is available on the target.

The setting ‘auto’ is the default and is special. It causes the compiler to select the floating-point and Advanced SIMD instructions based on the settings of -mcpu and -march.

-mfpu=neon-vfpv4
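What `-mfpu=neon-vfpv4` buys over plain NEON is mainly the fused multiply-add instructions. The sketch below shows how code could take advantage of that, under two assumptions: that GCC defines `__ARM_FEATURE_FMA` when this FPU is selected together with a suitable `-march` / `-mcpu` (worth confirming with `gcc -dM -E`), and that `axpy_f32` is a hypothetical helper rather than a function from the article.

```c
#include <assert.h>
#include <stddef.h>

#if defined(__ARM_NEON) || defined(__ARM_NEON__)
#include <arm_neon.h>
#endif

/* Hypothetical helper: y[i] += alpha * x[i] for n contiguous floats. */
void axpy_f32(int n, float alpha, const float *x, float *y)
{
    assert(x != NULL && y != NULL);
    int i = 0;
#if (defined(__ARM_NEON) || defined(__ARM_NEON__)) && defined(__ARM_FEATURE_FMA)
    /* NEON with VFPv4: fused multiply-add, 4 lanes at a time. */
    const float32x4_t a = vdupq_n_f32(alpha);
    for (; i + 4 <= n; i += 4) {
        float32x4_t vy = vld1q_f32(y + i);
        vy = vfmaq_f32(vy, vld1q_f32(x + i), a);
        vst1q_f32(y + i, vy);
    }
#elif defined(__ARM_NEON) || defined(__ARM_NEON__)
    /* NEON without FMA: multiply, then accumulate. */
    for (; i + 4 <= n; i += 4) {
        float32x4_t vy = vld1q_f32(y + i);
        vy = vmlaq_n_f32(vy, vld1q_f32(x + i), alpha);
        vst1q_f32(y + i, vy);
    }
#endif
    /* Scalar loop: the tail, or the whole array on non-NEON builds. */
    for (; i < n; ++i) y[i] += alpha * x[i];
}
```

The fused form rounds once instead of twice, so results can differ in the last bit from the non-fused paths. For inference workloads like the ones in this article that is usually acceptable.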

● 3.18.1 AArch64 Options

3.18.1 AArch64 Options

These options are defined for AArch64 implementations

● About specifying -O4 for gcc optimization

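GCC optimization

This guide introduces techniques for optimizing compiled code using safe, sane CFLAGS and CXXFLAGS. It also describes the theory behind optimization in general.

What about -O levels higher than 3?

Some users boast of getting even better performance by using -O4, -O9 and so on, but -O levels higher than 3 have no effect. The compiler will accept CFLAGS such as -O4, but they do essentially nothing. It performs the -O3 optimizations and nothing more.

Need more proof? Take a look at the source code.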

https://gcc.gnu.org/viewcvs/gcc/trunk/gcc/opts.c?view=markup

CODE -O source code

if (optimize >= 3)

{

flag_inline_functions = 1;

flag_unswitch_loops = 1;

flag_gcse_after_reload = 1;

/* Allow even more virtual operators. */

set_param_value ("max-aliased-vops", 1000);

set_param_value ("avg-aliased-vops", 3);

}

As you can see, any level higher than 3 still ends up being treated as -O3.

Skylake Xeon GCC Compiler Optimization Tests

-O0

-O1

-O2

-O2 -march=native

-O3

-O3 -march=native

-Ofast -march=native




Copyright (c) 2018 FREE WING, Y.Sakamoto


http://www.neko.ne.jp/~freewing/raspberry_pi/raspberry_pi_update_darknet_nnpack_neural_network_framework/